the Creative Commons Attribution 4.0 License.

Improving seasonal predictions of German Bight storm activity

Daniel Krieger

Sebastian Brune

Johanna Baehr

Ralf Weisse

Extratropical storms are one of the major coastal hazards along the coastline of the German Bight, the southeastern part of the North Sea, and a major driver of coastal protection efforts. However, the predictability of these regional extreme events on a seasonal scale is still limited. We therefore improve the seasonal prediction skill of the Max Planck Institute Earth System Model (MPI-ESM) large-ensemble decadal hindcast system for German Bight storm activity (GBSA) in winter. We define GBSA as the 95th percentiles of three-hourly geostrophic wind speeds in winter, which we derive from mean sea-level pressure (MSLP) data. The hindcast system consists of an ensemble of 64 members, which are initialized annually in November and cover the winters of 1960/61–2017/18. We consider both deterministic and probabilistic predictions of GBSA, for both of which the full ensemble produces poor predictions in the first winter. To improve the skill, we observe the state of two physical predictors of GBSA, namely 70 hPa temperature anomalies in September, as well as 500 hPa geopotential height anomalies in November, in areas where these two predictors are correlated with winter GBSA. We translate the state of these predictors into a first guess of GBSA and remove ensemble members with a GBSA prediction too far away from this first guess. The resulting subselected ensemble exhibits a significantly improved skill in both deterministic and probabilistic predictions of winter GBSA. We also show how this skill increase is associated with better predictability of large-scale atmospheric patterns.

The coastline of the German Bight, which is shared by the neighboring countries of Germany, Denmark, and the Netherlands, is frequently affected by strong extratropical cyclones and their accompanying hazards, such as storm surges. These extreme events repeatedly pose challenges to coastal protection agencies, emergency management, and other interests in the region. Local actors and stakeholders may therefore benefit from skillful predictions of these events on seasonal to decadal scales. Still, skillful predictions of storm activity on a regional scale remain a challenging task, even with today's state-of-the-art modeling capabilities.

In the research field of seasonal predictions, considerable progress has been achieved over the course of the past decade. Several studies have demonstrated that current climate models show prediction skill for many large-scale atmospheric modes of the Earth system on timescales beyond those of conventional weather forecasting, for example for the Northern Hemisphere winter climate (e.g., Fereday et al., 2012), the winter North Atlantic Oscillation (NAO; Athanasiadis et al., 2014; Scaife et al., 2014a; Dunstone et al., 2016; Athanasiadis et al., 2017) and its link to the stratosphere (Scaife et al., 2016), and to some extent the Arctic Oscillation (AO; Riddle et al., 2013; Kang et al., 2014). Further studies showed how this good representation of large-scale atmospheric drivers in seasonal prediction systems can be used to predict climate extremes such as windstorms in the northern extratropics (e.g., Renggli et al., 2011; Befort et al., 2018; Hansen et al., 2019; Degenhardt et al., 2022).

On a more local scale, a recent study focusing on the predictability of German Bight storm activity (GBSA) has indicated that, with a carefully chosen approach, a large model ensemble, and an evaluation of different forecast categories, probabilistic predictions of high storm activity can be skillful for averaging periods longer than 5 years (Krieger et al., 2022). However, Krieger et al. (2022) also showed that the predictive skill for single lead years in general, and for the next year in particular, is often low and barely statistically significant, even when using a large-ensemble decadal prediction system. Using the Met Office Global Seasonal Forecast System version 5 (GloSea5), Scaife et al. (2014a) found large areas of positive skill for winter storminess over the North Atlantic region, but only non-significant correlations of 0.15–0.3 over the German Bight. Using a newer version of GloSea5, Degenhardt et al. (2022) also found that, even though several storm metrics are skillfully predictable over large parts of the northeastern Atlantic Ocean, the skill for the German Bight is somewhat lower than in adjacent regions. While Krieger et al. (2022) did not explicitly investigate the predictability of GBSA on a seasonal scale, the low skill for lead year 1 warrants an investigation into the seasonal predictability of GBSA and its potential for improvement.

Even before the onset of advanced numerical modeling, methods were developed to improve the predictability of the climate system. Lorenz (1969) proposed the idea of analogue forecasting, a prediction method that builds on the hypothesis that two observed states of the atmosphere which closely resemble each other but are temporally disconnected (analogues) evolve in a similar manner. As the number of available observations, reanalyses, and climate model experiments has grown significantly over the last few decades, more data have become available to support climate reconstructions and predictions with this method (e.g., Van den Dool, 1994; Schenk and Zorita, 2012; Delle Monache et al., 2013; Menary et al., 2021). Closely related to analogue forecasting, another method has recently emerged which uses observable physical predictors of climate phenomena to estimate the future state of these phenomena. Previous studies using this technique have demonstrated that, on seasonal timescales, predictions of large-scale modes of atmospheric variability like the NAO can be improved through the use of known atmospheric and oceanic teleconnections (e.g., Dobrynin et al., 2018). These studies used first-guess predictions based on the state of multiple physical predictors to refine large model ensembles and thereby reduce model spread. Similar ensemble subselection techniques have also been used to increase the predictability of the European summer climate (Neddermann et al., 2019) and European winter temperatures (Dalelane et al., 2020). This predictor-based ensemble subselection method, however, has not yet been applied to small-scale climate extremes like storm activity.

The storm climate of west-central Europe, and in particular that of the German Bight, is subject to prominent multidecadal variability (e.g., Krueger et al., 2019; Krieger et al., 2021), which is arguably responsible for the comparatively high predictability of GBSA on a decadal scale, especially for multi-year averages (Krieger et al., 2022). Additionally, GBSA is connected to the large-scale atmospheric circulation in the Northern Hemisphere. GBSA has been shown to correlate positively with the NAO; however, the strength of this connection is subject to large fluctuations on a multidecadal scale. Other atmospheric phenomena during the winter season, such as the widely studied sudden stratospheric warmings, also play a role for the extratropical storm climate, since they influence the tropospheric weather regimes (e.g., Baldwin and Dunkerton, 2001; Song and Robinson, 2004; Domeisen et al., 2013, 2015) and are able to suppress or shift surface weather patterns in the mid-latitudes, sometimes even in a way that is contrary to the state of the NAO (Domeisen et al., 2020).

Peings (2019) found that a blocking pattern over the Ural region in November can be used to identify an increased likelihood of stratospheric warmings in the subsequent winter, which in turn favor blocking setups and thus lower-than-usual storm activity over west-central Europe. Siew et al. (2020) confirmed this connection to be part of a troposphere–stratosphere causal link chain with a typical timescale of 2–3 months. The results of Peings (2019) and Siew et al. (2020) suggest that the status of the Rossby wave pattern in November might be usable as a predictor for the German Bight storm climate in the subsequent winter season.

The state of the stratospheric polar vortex in winter has also been linked to the Quasi-Biennial Oscillation (QBO) via the Holton–Tan effect (e.g., Ebdon, 1975; Holton and Tan, 1980). The Holton–Tan effect proposes a connection between easterly QBO phases, which are characterized by easterly wind and negative temperature anomalies in the lower stratosphere, and a weakened stratospheric polar vortex and thus positive stratospheric temperature anomalies in the polar Northern Hemisphere. The mechanism behind this effect has been widely studied and confirmed, e.g., by Lu et al. (2014). While some studies have already looked into the simultaneous occurrence of QBO anomalies and shifts in the European winter climate and associated windows of opportunity for better predictability (e.g., Boer and Hamilton, 2008; Marshall and Scaife, 2009; Scaife et al., 2014b; Wang et al., 2018), the state of the tropical stratosphere has not been used as a predictor for the upcoming winter storm climate in west-central Europe yet.

In this paper, we thus show that the predictability of German Bight storm activity on a seasonal scale is inherently low but can be significantly improved through the combined use of tropospheric and stratospheric physical predictors. Drawing on the proposed links between the European winter storm climate and the Rossby wave pattern, as well as the state of the tropical stratosphere, we use temperature anomalies in the lower tropical stratosphere in September, as well as extratropical geopotential height anomalies in the middle troposphere in November, as predictors for GBSA. We generate first guesses of GBSA from these predictors and select members from our ensemble based on their proximity to the first guesses. From the large-ensemble prediction system with 64 members, we generate both deterministic and probabilistic predictions of winter GBSA, both for the full and the subselected ensemble, and analyze the improvement of GBSA predictability through the subselection process. We demonstrate how, compared to the low prediction skill of the full ensemble, the subselection technique significantly increases the prediction skill. The large size of the ensemble also enables a thorough sensitivity analysis of the dependency of the skill on the subselection size.

2.1 Storm activity observations

As an observational reference for storm activity in the German Bight, we make use of the time series of winter GBSA from Krieger et al. (2021). The GBSA proxy in Krieger et al. (2021) is defined as the standardized seasonal (December–February, DJF) 95th percentiles of geostrophic wind speeds. These geostrophic wind speeds were originally calculated from three-hourly observations of mean sea-level pressure (MSLP) along the German Bight coast in Denmark, Germany, and the Netherlands and cover the period 1897/98–2017/18.

2.2 MPI-ESM-LR decadal hindcasts

In this study, we employ the extended large-ensemble decadal hindcast system based on the Max Planck Institute Earth System Model (MPI-ESM) in low-resolution (LR) mode (Mauritsen et al., 2019; Hövel et al., 2022; Krieger et al., 2022). Even though this study focuses on the seasonal timescale, we choose decadal hindcasts over any seasonal prediction systems, as the already available MPI-ESM decadal hindcast system provides us with a large ensemble size. While the ensemble consists of 80 members in total, we base our analysis on those 64 members for which three-hourly output is available (see Krieger et al. (2022) for details). At the time of this study, we are not aware of any single-model seasonal prediction system of this ensemble size and with three-hourly MSLP output available.

The MPI-ESM is a coupled climate model with individual components for the atmosphere (ECHAM6; Stevens et al., 2013), ocean and sea ice (MPI-OM; Jungclaus et al., 2013), land surface (JSBACH; Reick et al., 2013; Schneck et al., 2013), and ocean biogeochemistry (HAMOCC; Ilyina et al., 2013). Here, we only use the atmospheric output from the ECHAM6 component, which provides data at a temporal resolution of 3 h, a horizontal resolution of 1.875°, and a vertical resolution of 47 levels between 0.1 hPa and the surface (Stevens et al., 2013). The hindcasts are initialized on 1 November of every year from a 16-member assimilation run, starting in 1960. We use all hindcast runs initialized between 1960 and 2017, because the observational reference time series of winter GBSA ends in 2017/18.

2.3 German Bight storm activity (GBSA)

To quantify storm activity in this study, we draw on an established metric that uses the statistics of the hypothetical near-surface geostrophic wind speed, which is obtained from horizontal gradients of MSLP (Schmidt and von Storch, 1993). Unlike direct wind speed observations, which often show strong inhomogeneities, long MSLP records are usually more homogeneous and therefore better suited to provide information about the long-term storm climate (e.g., Alexandersson et al., 1998; Krueger and von Storch, 2011). Since winter GBSA is not directly available as an output variable of the hindcast system, we derive it from the three-hourly MSLP output (Krieger and Brune, 2022a). We calculate winter GBSA as the standardized seasonal (December–February) 95th percentiles of three-hourly geostrophic wind speeds over the German Bight. For every ensemble member individually, we convert the horizontal differences of MSLP at three stations in the German Bight into geostrophic wind speeds at every time step. We then derive the 95th percentiles for every winter season and standardize them by subtracting the mean and dividing by the standard deviation of the winters 1960/61–2017/18 of the respective ensemble member. The calculation follows the methodology of Krieger et al. (2022) but uses seasonal instead of annual 95th percentiles. In this way, the calculation of GBSA in the hindcast is consistent with the derivation of observed GBSA in Krieger et al. (2021).
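
The conversion from three-station MSLP to a GBSA index can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: it fits a plane through the pressures at the three stations to obtain the horizontal pressure gradient and applies the geostrophic relation |v_g| = |∇p| / (ρ f). The constant air density, the latitude used for the Coriolis parameter, the local Cartesian station coordinates, and the function names `geostrophic_speed` and `gbsa_index` are hypothetical choices. The standardization uses the population standard deviation, which the text does not specify.

```python
import numpy as np

RHO_AIR = 1.25     # assumed constant air density (kg m^-3)
OMEGA = 7.292e-5   # Earth's angular velocity (rad s^-1)


def geostrophic_speed(p_pa, x_m, y_m, lat_deg):
    """Geostrophic wind speed (m s^-1) from MSLP at three stations.

    p_pa     : array (..., 3), MSLP in Pa at the three stations per time step
    x_m, y_m : arrays (3,), station coordinates on a local Cartesian plane (m)
    lat_deg  : latitude (deg) used for the Coriolis parameter
    """
    p_pa = np.asarray(p_pa, dtype=float)
    # Exact plane p = a + b*x + c*y through the three stations: b = dp/dx, c = dp/dy
    design = np.column_stack([np.ones(3), x_m, y_m])
    coeffs = np.linalg.solve(design, np.moveaxis(p_pa, -1, 0).reshape(3, -1))
    dpdx, dpdy = coeffs[1], coeffs[2]
    f = 2.0 * OMEGA * np.sin(np.deg2rad(lat_deg))  # Coriolis parameter
    speed = np.hypot(dpdx, dpdy) / (RHO_AIR * f)
    return speed.reshape(p_pa.shape[:-1])


def gbsa_index(speeds_by_winter):
    """Standardized DJF 95th percentiles, one value per winter.

    speeds_by_winter : sequence of 1-D arrays of three-hourly speeds, one per winter
    """
    p95 = np.array([np.percentile(s, 95) for s in speeds_by_winter])
    # Standardize over all supplied winters of this member (population std assumed)
    return (p95 - p95.mean()) / p95.std()
```

For one hindcast member, `speeds_by_winter` would hold the three-hourly speeds of each DJF season from 1960/61 to 2017/18, so that the standardization is based on exactly those winters.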

2.4 Predictors of GBSA

In this study, we aim to increase the predictability of winter GBSA by refining a large ensemble, i.e., by selecting the individual members that are closest to a first-guess prediction of winter GBSA. To achieve this, we first need to define the predictors and describe how the first guesses are generated.

We use fields of linearly detrended September 70 hPa temperature (T70) and November 500 hPa geopotential height (Z500) anomalies as our predictors for GBSA. The choice of the respective vertical levels (70 hPa for temperatures and 500 hPa for geopotential height), as well as the choice of September for T70, is based on a lead–lag correlation analysis between winter GBSA and temperature, as well as geopotential height fields at different levels and lead times. From this correlation analysis, September T70 and November Z500 emerged as the best-fitting combinations of lead time and vertical level (not shown). The data for these predictor fields are taken from the ERA5 reanalysis (Hersbach et al., 2020), which in its current state dates back to the year 1940. Anomalies are calculated by subtracting the 1940–2017 mean from the time series. We ensure that there are regions where the correlation coefficient between the predictor and GBSA is significantly different from zero over the whole investigation period (1940–2017 for predictors, winters 1940/41–2017/18 for GBSA).

In every prediction year, we generate a first guess of winter GBSA from the state of our chosen predictors. For each predictor $x_p$, we first determine which grid points show a locally significant positive correlation (p≤0.05) with GBSA over all years from 1940 to the year before the initialization. The statistical significance of the correlation is determined through a grid-point-wise 1000-fold bootstrapping with replacement (Kunsch, 1989; Liu and Singh, 1992), where the 0.025 and 0.975 quantiles of the bootstrapped correlations define the 95 % confidence interval. If the 95 % confidence interval excludes r=0, we consider the correlation at this grid point significant, and the grid point is taken into account for the generation of a first guess. As both the predictor anomalies and the index of winter GBSA are defined as standardized anomalies following a Gaussian normal distribution with a mean of 0 and a standard deviation of 1, we can directly translate the state of each predictor into a first guess of our predictand, GBSA. We therefore compute the first guess of GBSA as the area-weighted average $y_p$ of the state of the predictor $x_p$ over those grid points $(i,j)$ that show a locally significant positive correlation with GBSA, following Eq. (1):

$$y_p = \frac{\sum_{i,j} \cos\Phi_j \, x_p(i,j)}{\sum_{i,j} \cos\Phi_j}, \tag{1}$$

where both sums run only over these significantly positively correlated grid points.

In Eq. (1), $\cos\Phi_j$ denotes the cosine of the latitude $\Phi_j$ of each grid point, which serves as a weighting factor. For geopotential height anomalies, we constrain the region that can contribute to the first guess to the boreal extratropics between 30 and 90° N, as the pattern of geopotential height in this region describes the Rossby wave train, which strongly governs the extratropical winter storm climate. We make sure that each predictor contributes significantly positively correlated grid points in every prediction year, as the strength and location of the significant correlations may vary from year to year.
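
The two steps described above, the bootstrap test for locally significant positive correlations and the latitude-weighted first guess of Eq. (1), can be sketched as follows. This is an illustrative sketch, not the authors' implementation: the grid is assumed to be flattened to one axis of points, "significant positive" is taken to mean that the lower bound of the 95 % bootstrap interval is above zero, and the function names `bootstrap_corr_ci` and `first_guess` are hypothetical.

```python
import numpy as np


def bootstrap_corr_ci(x, y, n_boot=1000, seed=0):
    """95 % bootstrap confidence interval of Pearson r at every grid point.

    x : array (years, points), predictor anomalies in the training years
    y : array (years,), winter GBSA of the same years
    """
    rng = np.random.default_rng(seed)
    n_years = len(y)
    boot = np.empty((n_boot, x.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, n_years, size=n_years)  # resample years with replacement
        xb, yb = x[idx], y[idx]
        xa = xb - xb.mean(axis=0)
        ya = yb - yb.mean()
        boot[b] = (xa * ya[:, None]).sum(axis=0) / np.sqrt(
            (xa**2).sum(axis=0) * (ya**2).sum()
        )
    return np.quantile(boot, 0.025, axis=0), np.quantile(boot, 0.975, axis=0)


def first_guess(state, lat_deg, mask):
    """Eq. (1): cos(latitude)-weighted mean of the predictor state over masked points."""
    w = np.cos(np.deg2rad(lat_deg)) * mask
    if w.sum() == 0:
        raise ValueError("no significantly correlated grid points")
    return float((w * state).sum() / w.sum())
```

In use, `x` would contain the training years from 1940 up to the year before the initialization. For Z500, the mask could additionally be restricted to the boreal extratropics, e.g., `mask = (lo > 0) & (lat >= 30)`.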

In addition to the grid-point-wise significance test, we also test the fields of T70 and Z500 for global significance by controlling the false discovery rate (FDR; Wilks, 2006). To this end, we rank the p values of all n grid points from smallest ($p_{(1)}$) to largest ($p_{(n)}$), so that

$$p_{(1)} \le p_{(2)} \le \dots \le p_{(n)}.$$

We then test each p value against a threshold given by a predefined criterion of $\alpha_{\mathrm{FDR}} = 0.05$, scaled by the rank i of the respective p value and the total number of grid points n. If a p value satisfies the condition

$$p_{(i)} \le \frac{i}{n}\,\alpha_{\mathrm{FDR}},$$

we consider the correlation at this grid point to be globally significant. In this way, we can determine whether certain regions of our predictor fields appear locally significant only because of the spatial autocorrelation of the respective atmospheric fields. Note that for the calculation of the predictor states, we still use information from all locally significant grid points, regardless of whether they are globally significant.
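
A compact sketch of the FDR test is shown below. It follows the usual Benjamini–Hochberg step-up convention described by Wilks (2006), in which all p values up to the largest rank satisfying the condition are declared significant. Whether the study applies this step-up rule or a strictly pointwise comparison is an assumption here. The function name `fdr_significant` is hypothetical.

```python
import numpy as np


def fdr_significant(p_values, alpha_fdr=0.05):
    """Boolean mask of globally significant grid points under FDR control."""
    p = np.asarray(p_values, dtype=float).ravel()
    n = p.size
    order = np.argsort(p)                      # ranks: smallest p first
    p_sorted = p[order]
    passes = p_sorted <= alpha_fdr * np.arange(1, n + 1) / n
    sig_sorted = np.zeros(n, dtype=bool)
    if passes.any():
        k = np.nonzero(passes)[0].max()        # largest rank meeting the condition
        sig_sorted[: k + 1] = True             # step-up: reject all smaller p values too
    sig = np.empty(n, dtype=bool)
    sig[order] = sig_sorted                    # map back to the original grid order
    return sig.reshape(np.shape(p_values))
```

Because of the step-up rule, a p value can be declared significant even if it misses its own rank threshold, as long as a larger p value meets its threshold. In the second test case, 0.02 exceeds 0.05 · 1/3 but is still accepted because 0.03 satisfies 0.05 · 2/3.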

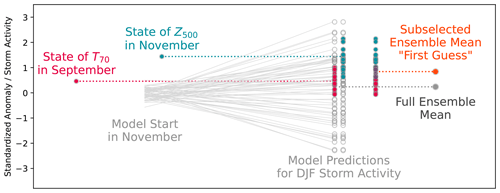

Figure 1. Schematic depiction of the predictor-based subselection workflow, adapted from Dobrynin et al. (2018).

For every hindcast run, we select the n ensemble members whose predicted GBSA is closest to the first guess derived from each of the two predictors, T70 and Z500, in the respective year. Closeness is defined as the absolute difference between the GBSA predicted by the respective member and the first guess. Because we select n members twice in every run, i.e., once for each predictor, and the two selections may overlap, the size of the resulting subselection varies between n members (if the first guesses from T70 and Z500 are identical in that year) and 2n members (if the two first guesses are far enough apart that the selections do not overlap). From this subselection, we then calculate deterministic and probabilistic GBSA predictions. A schematic overview of the predictor-based subselection is given in Fig. 1. Deterministic predictions are computed by averaging the GBSA predictions over all members in the subselection. For probabilistic predictions, we calculate the fraction of members within the subselection that exceed a threshold for high storm activity of 1 standard deviation above the long-term mean. Note that all selected members are weighted equally in these computations, even if they were selected by both predictors.
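
The subselection for a single hindcast year can be sketched as follows. This is a minimal illustration, not the authors' code. The union of the two selections is formed so that each member counts once, matching the equal weighting described above. Ties in distance are broken by member index through a stable sort, which is an assumption. The function name `subselect` is hypothetical.

```python
import numpy as np


def subselect(member_gbsa, first_guesses, n, threshold=1.0):
    """Predictor-based subselection for one hindcast year.

    member_gbsa   : (members,) predicted DJF GBSA of every ensemble member
    first_guesses : iterable of first guesses, one per predictor (e.g., T70, Z500)
    n             : number of members selected per predictor
    Returns the selected member indices, the deterministic prediction
    (subselection mean), and the probability of high storm activity.
    """
    member_gbsa = np.asarray(member_gbsa, dtype=float)
    selected = set()
    for guess in first_guesses:
        dist = np.abs(member_gbsa - guess)           # closeness to this first guess
        selected.update(np.argsort(dist, kind="stable")[:n].tolist())
    idx = np.array(sorted(selected))                 # union: each member counted once
    sub = member_gbsa[idx]
    deterministic = sub.mean()
    probability = np.mean(sub > threshold)           # fraction above +1 standard deviation
    return idx, deterministic, probability
```

With two distant first guesses, the subselection contains 2n members. With identical first guesses, it contains n members, which reproduces the two limiting cases named in the text.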

2.5 Skill metrics

To evaluate the improvement of prediction skill for winter GBSA, we first define separate skill metrics for deterministic and probabilistic model predictions.

We measure the skill of deterministic predictions with Pearson's anomaly correlation coefficient (ACC) and the root-mean-square error (RMSE) between predicted and observed quantities. The ACC is defined as

$$\mathrm{ACC} = \frac{\sum_{i=1}^{N} (f_i - \bar{f})(o_i - \bar{o})}{\sqrt{\sum_{i=1}^{N} (f_i - \bar{f})^2}\,\sqrt{\sum_{i=1}^{N} (o_i - \bar{o})^2}},$$

where $f_i$ and $o_i$ denote predictions and observations at time step i, $\bar{f}$ and $\bar{o}$ mark the long-term averages of predictions and observations, and N is the number of predicted winters. ACC values of 1 indicate a perfect correlation, 0 no correlation, and −1 a perfect anticorrelation. The statistical significance of the ACC is again determined through a 1000-fold bootstrapping with replacement and a significance criterion of p≤0.05.

The RMSE is calculated from the predicted and observed quantities $f_i$ and $o_i$ by

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (f_i - o_i)^2}.$$
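
The deterministic skill metrics and the bootstrap significance test of the ACC can be written compactly as below. This is a sketch under the assumption that significance means the 2.5 %–97.5 % bootstrap interval of the ACC excludes zero, analogous to the grid-point test. The function names are hypothetical.

```python
import numpy as np


def acc(f, o):
    """Pearson anomaly correlation coefficient between predictions f and observations o."""
    fa, oa = f - f.mean(), o - o.mean()
    return np.sum(fa * oa) / np.sqrt(np.sum(fa**2) * np.sum(oa**2))


def rmse(f, o):
    """Root-mean-square error between predictions f and observations o."""
    return np.sqrt(np.mean((f - o) ** 2))


def acc_is_significant(f, o, n_boot=1000, seed=0):
    """True if the 95 % bootstrap interval of the ACC (years resampled) excludes 0."""
    rng = np.random.default_rng(seed)
    boots = []
    for _ in range(n_boot):
        i = rng.integers(0, len(o), size=len(o))  # resample years with replacement
        boots.append(acc(f[i], o[i]))
    lo, hi = np.quantile(boots, [0.025, 0.975])
    return not (lo <= 0 <= hi)
```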

Probabilistic predictions of high storm activity are tested against a climatology-based reference prediction and evaluated with the Brier skill score (BSS), which is based on the strictly proper Brier score (Brier, 1950). The climatology-based reference prediction is constructed from the climatological frequencies of observed GBSA (e.g., Wilks, 2011). Here, we draw on the definition of GBSA from Krieger et al. (2021), which assumes an underlying Gaussian normal distribution.

We calculate the BSS as follows:

$$\mathrm{BSS} = 1 - \frac{\mathrm{BS}}{\mathrm{BS}_{\mathrm{cli}}}.$$

$\mathrm{BS}$ and $\mathrm{BS}_{\mathrm{cli}}$ indicate the Brier scores of the probabilistic model prediction and the fixed climatological reference prediction, respectively. Positive values show that the model predictions perform better than the climatology-based predictions, and vice versa. A BSS of 1 would indicate a perfect model prediction, i.e., all members of the ensemble predicting the occurrence or absence of a high-storm-activity event correctly in every year.

The individual Brier scores (BSs) are defined as

$$\mathrm{BS} = \frac{1}{N}\sum_{i=1}^{N} (F_i - O_i)^2,$$

where $F_i$ and $O_i$ denote predictions and observations at time step i of the N predicted winters. In the model, we calculate the predicted probability $F_i$ from the fraction of ensemble members that predict a high-storm-activity event. For the climatology-based prediction, $F_i$ is a fixed value. As high storm activity is defined via a threshold of 1 standard deviation above the mean state, we calculate the climatological probability of a high-storm-activity event to be $F_i = 1 - \Phi(1) \approx 0.159$, where $\Phi(x)$ is the cumulative distribution function of the standard Gaussian normal distribution. This means that the probability of a random sample from a Gaussian normal distribution with a mean of μ and a standard deviation of σ being larger than μ+1σ is slightly less than 16 %. The observed probability $O_i$ always takes on a value of either 1 or 0, depending on whether the event happened or not.
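
The Brier score, the climatological reference probability 1 − Φ(1), and the BSS can be sketched in a few lines. Φ is evaluated with `math.erf` to avoid extra dependencies. The helper names, including `ensemble_probability` for the fraction of members above the +1σ threshold, are hypothetical.

```python
import math

import numpy as np

# Climatological probability of exceeding +1 standard deviation: 1 - Phi(1)
P_CLIM = 1.0 - 0.5 * (1.0 + math.erf(1.0 / math.sqrt(2.0)))


def ensemble_probability(member_gbsa, threshold=1.0):
    """Fraction of members above the threshold (last axis = members)."""
    return np.mean(np.asarray(member_gbsa) > threshold, axis=-1)


def brier_score(F, O):
    """Mean squared difference between forecast probabilities F and binary outcomes O."""
    return np.mean((np.asarray(F, dtype=float) - np.asarray(O, dtype=float)) ** 2)


def brier_skill_score(F, O, p_clim=P_CLIM):
    """BSS of forecast F relative to a fixed climatological probability."""
    bs_cli = brier_score(np.full(len(O), p_clim), O)
    return 1.0 - brier_score(F, O) / bs_cli
```

By construction, the climatological forecast itself scores a BSS of 0 and a perfect forecast scores 1.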

2.6 Training and hindcast periods

The recent backward extension of the ERA5 reanalysis reaches back to 1940. Because the predictions of GBSA are based on predictors that are derived from regions where the predictor and GBSA correlate significantly, we require a sufficiently long training period to identify these regions before the first hindcast is initialized. Hence, we classify the first 2 decades (1940–1959), for which only ERA5 and observational GBSA data are available, as the training-only period and start the actual predictor-based first guesses of GBSA in 1960. In this way, we ensure that we only use reanalysis data that were already available at the start of the respective hindcast run to predict GBSA, while still using the full range of hindcasts, which begin in 1960. The hindcast period, i.e., the period in which we predict GBSA and assess the skill of the model and the subselection, thus comprises a total of 58 winters from 1960/61 to 2017/18.

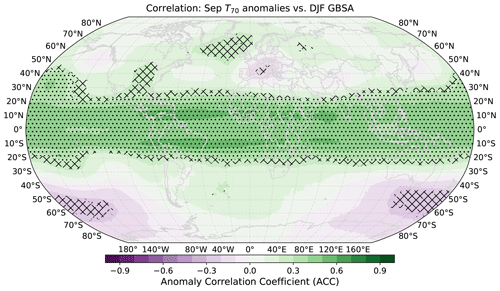

Figure 2. Grid-point-wise correlation coefficients between global T70 anomalies in ERA5 and observed winter (DJF) German Bight storm activity. Period 1940–2017 for temperature anomalies, 1940/41–2017/18 for storm activity. Hatching indicates local statistical significance (p≤0.05) determined through 1000-fold bootstrapping. Stippling indicates additional global field significance by controlling for the FDR at a level of $\alpha_{\mathrm{FDR}} = 0.05$.

2.7 Composites

To check whether our prediction mechanism is also physically represented in the hindcast, we calculate composites of T70 and Z500 in the years with highest and lowest modeled DJF GBSA, respectively. We use all initialization years (1960–2019), all 64 members, and all lead years except the first one after the initialization (2–10), leaving us with 34 560 model years. From these 34 560 years, we select the 100 highest and lowest GBSA winters, compute composite mean fields of both predictors in the respective years preceding these winters, and calculate the difference between the composites of high and low GBSA. We then analyze the patterns of the composite differences to determine whether they resemble the correlation patterns between the predictors in ERA5 and observed DJF GBSA.
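
The composite analysis can be sketched as follows, assuming the hindcast winters have been flattened into one sample axis (60 initialization years × 64 members × 9 lead years = 34 560 model years). It is also assumed that `predictor_fields[k]` holds the September T70 or November Z500 field preceding winter `k`. The function name `composite_difference` is hypothetical.

```python
import numpy as np


def composite_difference(gbsa, predictor_fields, n_extreme=100):
    """High-minus-low GBSA composite of a predictor field.

    gbsa             : (samples,) modeled DJF GBSA of every model winter
    predictor_fields : (samples, ny, nx) predictor field preceding each winter
    """
    order = np.argsort(gbsa)                  # ascending GBSA
    low, high = order[:n_extreme], order[-n_extreme:]
    return predictor_fields[high].mean(axis=0) - predictor_fields[low].mean(axis=0)
```

An array of shape (60, 64, 9) would first be flattened with `gbsa.reshape(-1)`, and the predictor fields reshaped consistently, e.g., `fields.reshape(-1, ny, nx)`.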

3.1 Correlations of predictor fields with winter storm activity

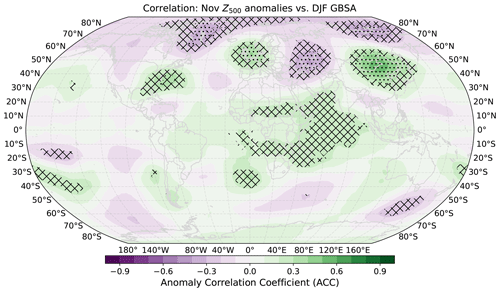

We identify T70 and Z500 anomalies as physical predictors for winter GBSA. To illustrate the connection between the global fields of these two predictors and storm activity, and to demonstrate which regions mainly contribute to the first-guess predictions, we correlate grid-point-wise time series of T70 and Z500 anomalies with observed winter GBSA for the entire time period of 1940–2017.

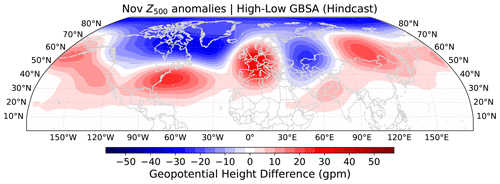

Figure 3. Grid-point-wise correlation coefficients between global Z500 anomalies in ERA5 and observed winter (DJF) German Bight storm activity. Period 1940–2017 for geopotential height anomalies, 1940/41–2017/18 for storm activity. Hatching indicates local statistical significance (p≤0.05) determined through 1000-fold bootstrapping. Stippling indicates additional global field significance by controlling for the FDR at a level of $\alpha_{\mathrm{FDR}} = 0.05$.

The highest correlations between GBSA and T70 anomalies are found in the tropics in a circumglobal band between roughly 15° N and 15° S, with values as high as 0.5–0.6 (Fig. 2). Notably, correlations are slightly lower directly at the Equator than a few degrees north and south of it. Over Europe, a smaller region with slightly negative correlations is present, surrounded by slightly positive correlations to the northeast and northwest. Over the Southern Ocean, a signal of slightly negative correlations emerges as well. However, no region outside the tropics correlates with DJF GBSA as strongly as the tropics themselves. In total, 21.1 % of all locally significant grid points (or 6.8 % of all grid points) fail the global field significance test, indicating that their local significance may arise by chance or from spatial autocorrelation. Most of these grid points lie outside the tropics, which supports the hypothesis that tropical stratospheric temperatures show the strongest connection to winter GBSA.

For Z500 anomalies, the strongest positive correlations with winter GBSA are found over the British Isles and the adjacent northeastern Atlantic, as well as over east-central Asia and the US East Coast, with peaks around 0.4 (Fig. 3). The strongest negative correlations emerge over east-central Europe, Greenland, and northeastern Siberia, reaching as low as −0.4. The correlation pattern in the boreal extratropics is in line with the findings of Peings (2019) and Siew et al. (2020) in that troughing (i.e., the opposite of ridging) over the Ural region, and thus a reduced likelihood of stratospheric warmings in the following winter season, is connected to higher-than-usual storm activity in the German Bight. These areas of significant correlations also strongly resemble a Rossby wave pattern spanning the boreal extratropics. Across the subtropical and tropical latitudes, some areas of slightly positive correlations can be found over the Sahel region and the Indian Ocean. Together with the negative correlations in the Arctic, these significant areas may indicate a relation to the Arctic Oscillation (AO; Thompson and Wallace, 1998). In the Southern Hemisphere, small patches of slightly positive and negative correlations are distributed circumglobally. However, the absolute correlations in the aforementioned regions in the tropics and the Southern Hemisphere are much lower than those in the northern extratropics. In total, 81.8 % of locally significant grid points (or 13.1 % of all grid points) fail the global field significance test, leaving only the regions associated with the Rossby wave pattern globally significant. This test supports the decision to only take the boreal extratropics into account for the calculation of the Z500 predictor states.

3.2 Improvement of GBSA predictability

We use the established connection between T70 and Z500 anomalies and DJF German Bight storm activity to predict the storm activity of the upcoming winter season for the hindcast period of 1960–2017. We use latitude-weighted field means of T70 and Z500 in ERA5 as our first guesses of DJF storm activity. Since both the predictor anomalies and GBSA are standardized, we do not need to apply a scaling factor to translate the field means of temperature and geopotential height anomalies into GBSA. We only use information from data between 1940 and the year of the start of the forecast. Thus, the number and distribution of grid points that are included in the calculation of the first guess can vary from year to year. To generate the subselected ensemble predictions of winter GBSA, we then select a certain number of ensemble members closest to the first guess of each predictor.

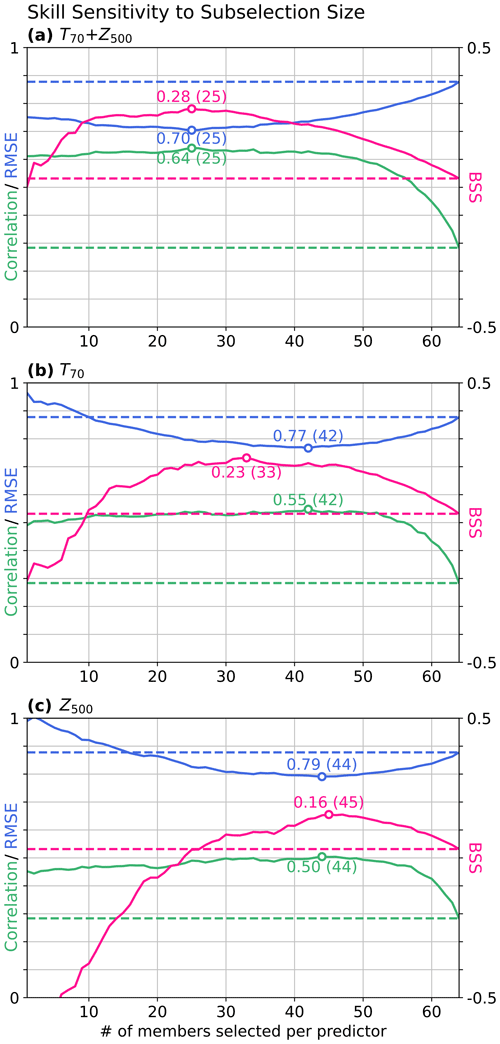

One degree of freedom in this process is the sampling size, i.e., the number of members selected for each predictor. The choice of this sampling size has an effect on the skill metrics of the subselected ensemble predictions. To illustrate the dependency of the model skill on the sample size, we test the correlation, RMSE, and high-activity BSS against climatology for all sample sizes between 1 and 64 (Fig. 4a) for the hindcast period of 1960–2017. Furthermore, we perform these sensitivity studies for both predictors individually to show how the combined use of both predictors changes the skill compared to just using one of the two (Fig. 4b and c).
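
A sensitivity sweep of this kind can be sketched as a loop over the sample size n, repeating the subselection in every hindcast year and scoring the resulting subselection means. The sketch below computes only ACC and RMSE (the BSS would follow analogously from the fraction of selected members above +1σ). The array layouts and the function name `sweep_sample_size` are assumptions, and the random numbers in the test below only check the mechanics; they are not results.

```python
import numpy as np


def sweep_sample_size(members, guesses, obs, sizes=range(1, 65)):
    """ACC and RMSE of the subselected ensemble mean for each sample size n.

    members : (years, members) predicted GBSA of every ensemble member
    guesses : (years, predictors) first guesses, one per predictor
    obs     : (years,) observed GBSA
    """
    results = {}
    for n in sizes:
        means = np.empty(members.shape[0])
        for yr in range(members.shape[0]):
            chosen = set()
            for g in guesses[yr]:  # n closest members per predictor, then union
                dist = np.abs(members[yr] - g)
                chosen.update(np.argsort(dist, kind="stable")[:n].tolist())
            means[yr] = members[yr, sorted(chosen)].mean()
        r = np.corrcoef(means, obs)[0, 1]
        rmse = np.sqrt(np.mean((means - obs) ** 2))
        results[n] = (r, rmse)
    return results
```

When n equals the ensemble size, every member is selected and the sweep reproduces the full-ensemble baseline, which corresponds to the dashed reference lines described for Fig. 4.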

Figure 4. Dependency of various skill scores (ACC, green; RMSE, blue; and BSS for high storm activity against climatology, pink) of model ensemble predictions of DJF GBSA on the sample size chosen for each predictor during the subselection. The subselection is performed based on (a) both predictors, (b) only T70, and (c) only Z500. Dashed baselines show the respective skill scores of the full 64-member ensemble. Optimal skill scores (highest ACC and BSS, lowest RMSE) are displayed as annotated dots, together with the optimal sample size in brackets.

The sensitivity analysis for the combined use of both predictors (Fig. 4a) shows a strong increase in correlation to above 0.6 for sample sizes of up to roughly 50 members. This indicates that removing only about one-sixth of all members per predictor is sufficient to increase the correlation between the deterministic prediction and observations significantly. The optimal sample size for correlations is found at 25 members per predictor (r=0.64). For the RMSE, smaller sample sizes between 10 and 40 members yield the biggest improvement, with an optimum at 25 members (RMSE=0.70). The BSS can be maximized by selecting 25 members for each predictor as well (BSS=0.28) and shows a similar window of opportunity as the RMSE, between 10 and 40 members. The sensitivity analysis for T70 alone (Fig. 4b) reveals a slightly lower potential for probabilistic skill improvements. Here, the BSS can be increased to 0.23 with a sample size of 33 members, but the BSS deteriorates compared to the full ensemble below 10 members. Similarly, choosing Z500 alone (Fig. 4c) only improves probabilistic forecasts when more than 25 members are selected, with a maximum of 0.16 at 45 members.

The deterministic skill metrics also show similar windows of opportunity for both predictors individually. For Z500, correlation and RMSE are optimized at a sample size of 44 members (r=0.5, RMSE=0.79), whereas the optimum for T70 is located at 42 members (r=0.55, RMSE=0.77). It should be noted that, for both predictors, the optimal sample sizes for RMSE and correlation are equal, since the correlation coefficient and RMSE are directly related for standardized sets of forecasts and observations (Barnston, 1992). As for the BSS, the individual contributions of the predictors to correlation and RMSE are smaller than the combined effect, which underlines the need to combine multiple predictors in the subselection to achieve the best possible skill increase.
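The link between correlation and RMSE can be written out under the idealized assumption that forecasts $f_i$ and observations $o_i$ ($i = 1, \dots, n$) are both standardized to zero mean and unit population variance, so that $\frac{1}{n}\sum_i f_i^2 = \frac{1}{n}\sum_i o_i^2 = 1$ and $\frac{1}{n}\sum_i f_i o_i = r$:

$$
\mathrm{RMSE}^2 = \frac{1}{n}\sum_{i=1}^{n}(f_i - o_i)^2
= \frac{1}{n}\sum_{i=1}^{n} f_i^2 + \frac{1}{n}\sum_{i=1}^{n} o_i^2 - \frac{2}{n}\sum_{i=1}^{n} f_i o_i
= 2(1 - r).
$$

Under this assumption, $\mathrm{RMSE} = \sqrt{2(1 - r)}$ decreases monotonically as $r$ increases, so the sample size that maximizes $r$ also minimizes the RMSE. This idealized form is only a sketch; the RMSE values quoted in this section depend on the standardization actually applied and need not satisfy it exactly.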

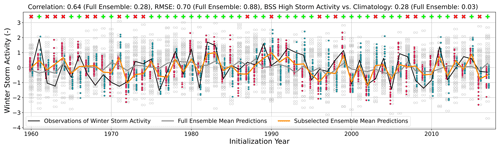

Figure 5. Predictions of DJF GBSA by the 64-member ensemble mean (gray line), the subselected ensemble mean (orange line), and observed DJF GBSA (black line). Period 1960–2017 for model initializations, 1960/61–2017/18 for storm activity observations. Circles indicate GBSA predictions of individual members; colored circles indicate the selected 25 members closest to the first-guess predictions based on T70 (red) and Z500 (teal). Green plus signs and red “x” markers denote forecasts where the subselection is closer to or further away from the observation than the full ensemble.

From the sensitivity study, we find that sample sizes of 20–30 members constitute a fair compromise between the optimal sample sizes of deterministic and probabilistic predictions. Therefore, we analyze the prediction of winter GBSA in the hindcast period in greater detail for an example subselection size of 25 members per predictor (Fig. 5).

Over the hindcast period, the mean of the ensemble subselected on the basis of the combined T70 and Z500 first-guess estimates correlates well with observed winter GBSA (r=0.64), an improvement of 0.36 over the deterministic full-ensemble prediction (r=0.28). The subselected ensemble captures the variability in DJF GBSA much better than the full 64-member ensemble. High agreement between first-guess predictions and observations is found in the late 1970s, the 1980s, and between the mid-1990s and the mid-2000s. With an RMSE of 0.70, the subselection-based prediction shows a lower error than the full ensemble (0.88). Furthermore, the BSS against climatology of the reduced ensemble for high storm activity predictions is greatly increased to 0.28, compared to 0.03 for the full 64-member ensemble. In 39 of the 58 individual predictions (67 %), the subselection improves the prediction, as measured by the absolute difference between ensemble mean and observations.
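The probabilistic score and the per-winter comparison can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the threshold defining "high storm activity" is a hypothetical parameter (for example an upper-tercile value of observed GBSA), the forecast probability is assumed to be the fraction of members above that threshold, and the climatological reference is assumed to be the observed base rate. The helper names are hypothetical.

```python
import statistics


def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    return statistics.fmean((p - o) ** 2 for p, o in zip(probs, outcomes))


def bss_high_activity(ensemble, obs, threshold):
    """BSS of the event 'GBSA > threshold' against a climatological forecast.

    Forecast probability per winter = fraction of members above the threshold.
    Reference forecast = observed base rate of the event (assumption).
    """
    outcomes = [1.0 if o > threshold else 0.0 for o in obs]
    probs = [sum(m > threshold for m in members) / len(members) for members in ensemble]
    base_rate = statistics.fmean(outcomes)
    bs_clim = brier_score([base_rate] * len(obs), outcomes)
    return 1.0 - brier_score(probs, outcomes) / bs_clim


def fraction_improved(full, sub, obs):
    """Share of winters in which the subselected mean is closer to the observation."""
    better = [abs(statistics.fmean(s) - o) < abs(statistics.fmean(f) - o)
              for f, s, o in zip(full, sub, obs)]
    return sum(better) / len(better)
```

Because the reference is the observed base rate, a constant forecast of that rate scores BSS = 0 and a perfect deterministic forecast scores BSS = 1; `fraction_improved` mirrors the "39 out of 58" count based on absolute differences.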

Overall, all three metrics show a significant improvement for the first-guess-based reduced ensemble, revealing that both deterministic and probabilistic storm activity predictions can be significantly improved by the combined inclusion of T70 and Z500 as physical predictors.

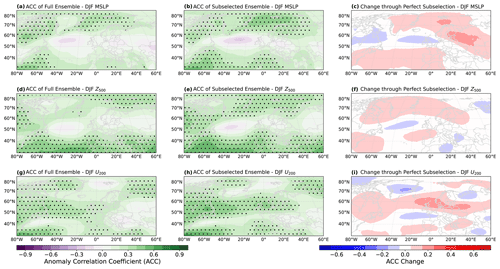

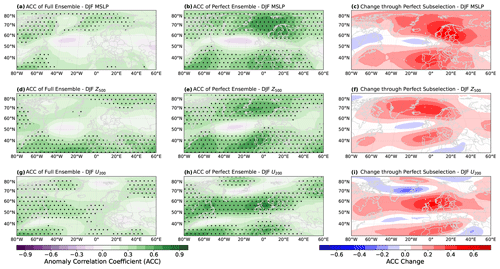

Figure 6. Anomaly correlation coefficients (ACC) for ensemble mean predictions of the full 64-member ensemble (a, d, and g), the 25-member subselection (b, e, and h), and the change in ACC between the full and subselected ensemble (c, f, and i) for winter-mean (DJF) MSLP anomalies (a–c), 500 hPa geopotential height anomalies (Z500, d–f), and 200 hPa zonal wind anomalies (U200, g–i). Winter-mean anomalies are calculated by averaging monthly anomalies from December, January, and February. Period 1960/61–2017/18. Stippling indicates statistical significance (p≤0.05) determined through 1000-fold bootstrapping.

3.2.1 Skill increase for large-scale atmospheric variables

In order to determine on a physical basis why the subselected ensemble shows a higher prediction skill for GBSA in both deterministic and probabilistic modes, we analyze the change in ACC between the full ensemble mean and the mean of the subselected ensemble for three atmospheric variables that can be associated with the state of the winter climate over Europe (Fig. 6). We choose one variable that we also use for the ensemble subselection, winter-mean 500 hPa geopotential height (Z500), and two variables that are not included in the ensemble subselection, namely winter-mean MSLP and 200 hPa zonal wind (U200). Variations in MSLP indicate the prevalent distribution of high and low pressure areas, which directly influence the near-surface wind speed and can be indicative of the mean wind climate during winter. The field of Z500 provides insight into the state of the Rossby wave pattern in winter and whether the large-scale mid-tropospheric flow diverts storms away from or towards the German Bight. The location and strength of the polar jet stream, expressed as U200, govern the lower tropospheric setup and can enhance or suppress the formation of storms.

Figure 7. Like Fig. 6 but for a perfect test, i.e., the 25 members closest to the actually observed GBSA are selected.

We find that the full ensemble shows significant skill for deterministic winter MSLP forecasts north of 60° N, as well as for winter Z500 south of 45° N, but limited skill for both MSLP and Z500 over west-central Europe and the adjacent region of the North Atlantic Ocean (Fig. 6a and d). The subselected ensemble shows a slightly higher skill for MSLP over Scandinavia and the Iberian Peninsula, but not over the German Bight and more generally west-central Europe (Fig. 6b and c). The skill of the subselection for Z500 is also slightly improved from Greenland to northern Scandinavia (Fig. 6e and f). Despite not showing an improvement over the German Bight, higher skill north and south of the German Bight indicates an increase in the predictability of the meridional gradient of MSLP and Z500, which is crucial to more accurately predict the wind climate in the German Bight. For U200, the full ensemble shows significant skill in a mostly zonally oriented band spanning from the North Atlantic around 55° N into west-central Europe (Fig. 6g). Notably, positive correlations are located closer to the German Bight than for MSLP and Z500. The subselected ensemble mostly retains this correlation pattern but extends the significant skill across the German Bight into east-central Europe (Fig. 6h and i). The improvement in predictability of U200, which is associated with the strength and location of the jet stream, is in accordance with the improvement in GBSA prediction skill, as the jet stream governs the formation and intensification of extratropical cyclones.
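The stippling in Figs. 6 and 7 rests on 1000-fold bootstrapping. A minimal single-grid-point sketch of testing the ACC gain of the subselection follows; resampling individual winters with replacement and the one-sided p-value are assumptions of this sketch, since the exact resampling scheme is not described in this section. The function name `acc_gain_bootstrap` is hypothetical.

```python
import math
import random
import statistics


def pearson(x, y):
    """Centered (anomaly) correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def acc_gain_bootstrap(obs, full_mean, sub_mean, n_boot=1000, seed=0):
    """ACC gain of the subselection at one grid point and a bootstrap p-value.

    Winters are resampled with replacement (simple i.i.d. bootstrap, an
    assumption); p is the share of valid resamples with a gain <= 0.
    """
    rng = random.Random(seed)
    n = len(obs)
    gain = pearson(sub_mean, obs) - pearson(full_mean, obs)
    not_positive = valid = 0
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        o = [obs[i] for i in idx]
        try:
            g = (pearson([sub_mean[i] for i in idx], o)
                 - pearson([full_mean[i] for i in idx], o))
        except ZeroDivisionError:  # degenerate resample with zero variance
            continue
        valid += 1
        not_positive += g <= 0
    return gain, not_positive / valid
```

Applied at every grid point to winter-mean anomalies of MSLP, Z500, or U200, a map of `gain` with stippling where p ≤ 0.05 would correspond in spirit to panels (c), (f), and (i).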

3.2.2 Potential capabilities of the model (perfect test)

To determine the theoretical maximum of skill improvement that the model could achieve, we perform a perfect test. Instead of choosing the 25 members closest to the first-guess winter GBSA determined from the two respective predictors as in the previous section, the perfect test selects those 25 members in each forecast that are closest to the actually observed winter GBSA. We refer to the set of these members selected in the perfect test as the perfect ensemble. For this perfect ensemble, we again analyze the change in ACC for MSLP, U200, and Z500 (Fig. 7). Note that operationally the perfect test would require information from the future, as the selection of a perfect ensemble at the start of the forecast in November relies on observational data that are not available until the end of February of the following year. For this reason, the perfect test is merely a tool of retrospective model evaluation and cannot be replicated operationally. Again, we find that the greatest skill increases occur in regions where the full ensemble already showed significant skill. For MSLP and Z500, the skill north and south of the German Bight and therefore the predictability of the meridional gradient is significantly improved, while the skill in a region near and slightly west of the German Bight is almost unaffected by selecting the perfect ensemble (Fig. 7b, c, e, and f). Even with knowledge of future GBSA, the perfect ensemble is not able to significantly improve predictions of MSLP and Z500 in this area. The perfect ensemble also improves U200 predictability over regions where the full ensemble already showed skill, i.e., mostly between 50 and 65° N (Fig. 7h and i).
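The operational subselection and the perfect test share the same selection rule and differ only in the target value. A minimal sketch with a hypothetical helper name and toy values, in which the target is either a predictor-based first guess or the observed GBSA:

```python
import statistics


def selected_mean(members, target, k=25):
    """Mean of the k member GBSA predictions closest to a target value."""
    closest = sorted(members, key=lambda m: abs(m - target))[:k]
    return statistics.fmean(closest)


# Toy winter with five members (illustrative values only).
members = [0.0, 1.0, 2.0, 3.0, 4.0]
# Operational mode: target is a first guess derived from the predictors (toy value).
operational = selected_mean(members, target=1.4, k=3)
# Perfect test: target is the observed GBSA, known only after February (toy value).
perfect = selected_mean(members, target=2.2, k=3)
```

Swapping the target is all that separates the two modes, which is why the perfect test measures what the ensemble could deliver if the first guess were exact.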

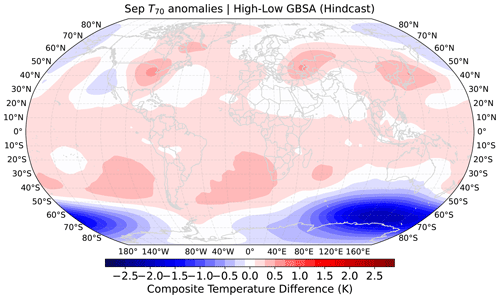

Figure 8. Composite mean T70 of 100 model years with the highest subsequent DJF GBSA minus composite mean T70 of 100 model years with the lowest subsequent DJF GBSA in MPI-ESM-LR decadal hindcast runs. Data are taken from all initializations, all members, and all lead years except for the first year after initialization.

Figure 9. Composite mean Z500 of 100 model years with the highest subsequent DJF GBSA minus composite mean Z500 of 100 model years with the lowest subsequent DJF GBSA in MPI-ESM-LR decadal hindcast runs. Data are taken from all initializations, all members, and all lead years except for the first year after initialization.

Generally, the patterns of skill increase through ensemble subselection are similar for the predictor-based hindcast and the perfect ensemble. The major difference between the two modes is that the increase in predictability of MSLP, Z500, and U200 is much larger in the perfect ensemble, which is to be expected as the perfect test uses information from the future. From the similarity of the skill improvement patterns, however, we infer that the improvement of GBSA prediction skill through member subselection is consistent with the physical mechanisms behind the extratropical winter storm climate and its predictability. The strong contrast in the magnitude of skill improvement points to the potential of the ensemble for even better predictions of the extratropical winter climate. However, additional research into more sophisticated ensemble refinement techniques, possibly involving machine learning, is required to exploit this potential.

3.3 Representation of the mechanisms in the model

Figures 8 and 9 show differences in composite mean modeled T70 and Z500 fields between years prior to modeled high- and low-storm-activity winters. The patterns of T70 differences (Fig. 8) barely resemble the observed correlation patterns that are apparent between reanalyzed T70 fields and DJF GBSA observations (see Fig. 2). Differences in the tropics, where observed correlations are highest, hardly exceed 0.3 K. In contrast, negative differences of up to −2 K, i.e., lower T70 preceding high DJF GBSA, emerge in the austral extratropics, where slightly negative correlations can also be found in the observations. Overall, the model appears to be incapable of reproducing the pathway from stratospheric temperature anomalies in September to changes in the extratropical winter storm climate in the German Bight.

The patterns in the composite differences of Z500 (Fig. 9), however, show a fair agreement with observed correlation patterns (see Fig. 3). Before high-storm-activity winters, geopotential heights in the model are up to 30 gpm higher over the US East Coast, west-central Europe, and northeastern Asia than before low-storm-activity winters. Similarly, geopotential heights up to 30 gpm lower are modeled over Canada, Greenland, the Ural region, and the Arctic in years prior to high-storm-activity winters. These regions of largest geopotential height differences match the regions of significant correlations between Z500 in ERA5 and observed DJF GBSA. We thus conclude that the physical link between November geopotential height anomalies and subsequent DJF GBSA is very well modeled by the hindcast system.
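The composites in Figs. 8 and 9 pool all initializations, members, and lead years except the first. A minimal sketch of the high-minus-low composite difference, assuming the pooled model years are given as hypothetical `(gbsa, field)` pairs with each predictor field flattened to a list of grid values and no area weighting:

```python
import statistics


def composite_difference(samples, n=100):
    """Mean field before the n highest-GBSA winters minus that before the n lowest.

    samples: list of (gbsa, field) pairs, where field is a flat list of grid
    values (e.g. September T70 or November Z500) preceding that winter.
    """
    if 2 * n > len(samples):
        raise ValueError("need at least 2n samples")
    ranked = sorted(samples, key=lambda s: s[0])
    low, high = ranked[:n], ranked[-n:]
    n_points = len(samples[0][1])

    def mean_field(group):
        # Grid-point-wise mean over all model years in the group.
        return [statistics.fmean(field[i] for _, field in group) for i in range(n_points)]

    return [h - l for h, l in zip(mean_field(high), mean_field(low))]
```

A positive value at a grid point means that the predictor field is higher, on average, before the model's high-activity winters than before its low-activity winters, which is the sign convention of Figs. 8 and 9.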

We use a decadal prediction system for seasonal predictions because we want to make use of the large ensemble size and the high temporal resolution of the model output. While a seasonal prediction system would be sufficient for this analysis, we are not aware of any available seasonal single-model initialized large ensembles with 64 members and three-hourly MSLP output. In addition, the use of the MPI-ESM-LR decadal prediction system allows us to directly compare the predictability for the first winter to the results from Krieger et al. (2022). We find that the full-ensemble prediction skill for winter GBSA (r=0.28, BSS=0.03) is close to what Krieger et al. (2022) found for lead-year-1 predictions of annual GBSA. This similarity can be explained by the higher wind speeds of storms in winter, which make the contribution of the winter season to annual storm activity larger than that of the remaining seasons.

Furthermore, the MPI-ESM-LR decadal hindcast offers a total of about 60 initialization years, while the corresponding seasonal prediction system based on the MPI-ESM-LR only covers about 40 initialization years. Additionally, the backward extension of ERA5 to 1940 allows us to set the initial training period, i.e., the period from which we determine areas of significant correlation between GBSA and the predictors, to the 2 decades that fully precede the first hindcast run. Thus, we are able to generate predictor-based first guesses for almost 6 decades of hindcast initializations to test the skill of the model, while the seasonal system (in Dobrynin et al., 2018) only allowed for a hindcast period of 2 decades.
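How a training period can be turned into a first guess may be sketched as follows. This is a hypothetical illustration, not necessarily the authors' procedure: it keeps grid points whose training-period correlation with GBSA reaches a threshold `r_min` (a stand-in for a proper significance test), averages the sign-adjusted predictor over those points into an index, and maps the index to GBSA with a least-squares line fitted on the training years only, so that no information from the hindcast period enters the first guess.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def first_guess(train_fields, train_gbsa, new_field, r_min):
    """Hypothetical predictor-to-GBSA translation trained on preceding years only.

    train_fields: predictor fields (flat lists of grid values), one per training year.
    train_gbsa: observed winter GBSA for the same years.
    r_min: hypothetical correlation threshold replacing a significance test.
    """
    n_points = len(train_fields[0])
    corr = [pearson([f[i] for f in train_fields], train_gbsa) for i in range(n_points)]
    mask = [i for i, r in enumerate(corr) if abs(r) >= r_min]
    if not mask:
        raise ValueError("no grid point passes the correlation threshold")

    def index(field):
        # Sign-adjusted mean over the mask, so negatively correlated points
        # push the index in the same direction as positively correlated ones.
        return statistics.fmean(math.copysign(1.0, corr[i]) * field[i] for i in mask)

    x = [index(f) for f in train_fields]
    mx, my = statistics.fmean(x), statistics.fmean(train_gbsa)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, train_gbsa))
             / sum((a - mx) ** 2 for a in x))
    return my + slope * (index(new_field) - mx)
```

Each subsequent hindcast year would call `first_guess` with the observed predictor field of that year as `new_field`.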

The improved prediction skill for winter GBSA (r=0.64) is higher than what is typically achieved with full-ensemble seasonal or decadal prediction systems, especially in the German Bight, where previous studies on large-scale winter storm activity have demonstrated shortcomings of seasonal (e.g., Scaife et al., 2014a; Degenhardt et al., 2022) and decadal prediction systems (e.g., Kruschke et al., 2014, 2016; Moemken et al., 2021). This high skill for storm activity is especially notable considering the comparatively low ACC of both the full and the subselected ensemble for winter MSLP over Europe. Here, the winter MSLP ACC values remain below the ACCs of, for example, a multisystem seasonal prediction of MSLP (Athanasiadis et al., 2017) and are closer to those found by Athanasiadis et al. (2020) on the decadal scale.

While our subselection increases the skill quite notably, there is still room for improvement. This becomes especially apparent in the perfect test, where the potential ACC increase for associated physical parameters like Z500, U200, and MSLP is much larger than for our predictor-based ensemble subselection. A possible method to further improve the predictability and to rely more on the model physics would be to check which members actually predicted the observed patterns in November correctly and to subselect those members. However, a test that replaces the members closest to the state of the Z500 predictor field with those members that exhibit the highest pattern correlations with observed Z500 fields in November results in a smaller increase in prediction skill for GBSA (not shown). We argue that the ensemble spread in November (i.e., directly after the initialization) is too low to objectively distinguish “good” from “bad” members. This method of refining the ensemble based on the predictions of observed patterns would become more feasible if the ensemble were initialized earlier than November or if the winter prediction were to be updated during the winter, based on, for instance, the model representation of certain observed atmospheric fields in December or January.
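The alternative test based on pattern correlations can be sketched as ranking members by the centered spatial correlation between their November Z500 field and the observed field. The function names are hypothetical, and area weighting (e.g. by the cosine of latitude), which a real implementation on a latitude–longitude grid would normally apply, is omitted for brevity.

```python
import math
import statistics


def pattern_correlation(a, b):
    """Centered spatial correlation between two flat fields (no area weighting)."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)


def best_matching_members(member_fields, observed_field, k):
    """Indices of the k members whose November Z500 best matches observations."""
    scores = [pattern_correlation(f, observed_field) for f in member_fields]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
```

Directly after initialization, the members' fields are all close to the observed state, so their pattern correlations differ little; this is consistent with the argument that such a ranking hardly separates "good" from "bad" members in November.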

The correlation between temperature anomalies in the tropical stratosphere and GBSA is notably higher than the correlation between the same predictor and both the wintertime North Atlantic Oscillation (NAO) and Arctic Oscillation (AO) indices, two climate modes representative of the larger-scale atmospheric circulation over the northern mid and high latitudes. We argue that the higher correlation with GBSA is caused by the strong multidecadal signal within both the tropical stratosphere and GBSA, which appears to be in phase over the investigated period. While GBSA is also connected to the NAO and the AO to a certain degree, with correlations of 0.51 (NAO) and 0.40 (AO) for 1960/61–2017/18, the connection to the NAO has been shown to fluctuate over time (e.g., Krieger et al., 2021). In the 1960s, the running correlation between GBSA and the NAO index reached its minimum at values below 0.2, indicating that the decadal to multidecadal signals in both time series appear to move out of phase at times. This implies that even with an almost perfect forecast of the NAO, as for instance achieved by Dobrynin et al. (2018), an equally good prediction of GBSA cannot be guaranteed. Furthermore, we conclude that, while Scaife et al. (2014a) attribute a significant fraction of prediction skill for winter storm activity to the predictability of the NAO, our predictors may be better suited for direct GBSA predictions without simultaneously improving NAO predictions.
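A running correlation such as the one between GBSA and the NAO index can be computed with a sliding window over consecutive winters. In this minimal sketch, the window length is a free parameter; the value used by Krieger et al. (2021) is not stated here, so any particular choice (e.g. 21 winters) is hypothetical, as is the function name.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def running_correlation(x, y, window):
    """Correlation of x and y in every window of consecutive winters."""
    if not 2 < window <= len(x):
        raise ValueError("window must be between 3 and the series length")
    return [pearson(x[i:i + window], y[i:i + window])
            for i in range(len(x) - window + 1)]
```

Windows in which the two series drift out of phase show up as low values, such as those below 0.2 reported for the 1960s.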

Since this is a single-model study based on the MPI-ESM-LR, our findings are model-specific. Therefore, the conclusions we draw hold for this model and the associated model physics. However, because the subselection process is purely based on the statistical relationship between reanalysis data and observations, it could also work in other large prediction ensembles, as long as the internal variability in the ensemble encompasses the natural variability of GBSA.

We confirmed the connection between GBSA and the two chosen predictors through correlation analysis based on the ERA5 reanalysis. To ensure that the choice of reanalysis does not bias our results, we performed the correlation analysis between the predictor fields and GBSA in the NCEP-NCAR reanalysis (Kalnay et al., 1996) for the winters 1948/49–2017/18 and found similar patterns of correlations (not shown).

Despite having increased the predictability for the first winter on a seasonal scale, the decadal skill matrix for annual GBSA in Krieger et al. (2022) presents more lead times with poor predictability between lead year 1 and longer averaging periods. Using tropospheric patterns as predictors for longer lead times than the first winter is unphysical given the short memory of the troposphere. Therefore, new predictors (e.g., sea surface temperature) would need to be tested and used for an improvement of the GBSA prediction skill beyond the first winter. Alternatively, the model could be optimized to skillfully predict the state of the tropical stratosphere beyond the first year, for example via an accurate representation of the QBO. Such a prediction would then still require a statistical approach to link the QBO to GBSA, since we showed that the pathway from the tropical stratosphere to the extratropics in the boreal winter is poorly represented in the model (Fig. 8). Looking beyond the predictability of GBSA, the ensemble subselection method may be usable to improve the predictability of other climate extremes that can be associated with physical precursors. As long as the internal variability of a prediction system is able to capture the variability of the predicted event or extreme, and precursors with a stationary link to the event are found, an improvement of the prediction skill appears feasible. This method is also not limited to a certain timescale, so that the same approach may be usable not only in decadal prediction, but also in subseasonal or weather prediction. Any further analysis in this direction, however, is beyond the scope of this study.

We showed that the ensemble subselection technique first proposed by Dobrynin et al. (2018) can be applied to large-ensemble predictions of small-scale climate extremes. Using September T70 and November Z500 anomalies as predictors, we were able to increase the prediction skill of the MPI-ESM-LR large-ensemble decadal prediction system for winter GBSA for both deterministic and probabilistic predictions over a hindcast period of 58 winters. Compared to the inherently low prediction skill of the full ensemble, the subselection adds value to the seasonal predictability of GBSA by improving the ACC from 0.28 to 0.64, RMSE from 0.88 to 0.70, and BSS for high storm activity against climatology from 0.03 to 0.28. The sensitivity analysis showed that the improvement of skill metrics depends on the size of the subselection and on the combination of predictors. We also showed that the skill gain can be explained through physical mechanisms, as the subselected ensemble also displays a higher ACC for deterministic predictions of winter-mean U200 over the German Bight, as well as for the meridional gradient of MSLP and Z500 over north-central Europe, all of which are closely related to the European winter storm climate.

ERA5 reanalysis products that were used to support this study are available from the Copernicus Data Store at https://doi.org/10.24381/cds.6860a573 (Hersbach et al., 2023). The three-hourly German Bight MSLP output data from the decadal prediction system are available at http://hdl.handle.net/21.14106/04bc4cb2c0871f37433a73ee38189690955e1f90 (Krieger and Brune, 2022a). Monthly means of MSLP, T70, Z500, and U200 from the decadal prediction system are available at https://hdl.handle.net/21.14106/c69ceecb1584cc50247ae6e492fb1ef33e65ac37 (Brune et al., 2022). Computed German Bight storm activity time series from the decadal prediction system are available at http://hdl.handle.net/21.14106/e14ca8b63ccb46f2b6c9ed56227a0ac097392d0d (Krieger and Brune, 2022b).

DK, JB, and RW designed the presented study. SB provided model data from the MPI-ESM hindcast experiments. DK analyzed the data and created the figures. All authors discussed the results. DK took the lead in writing the manuscript with input from all co-authors.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This work has been developed in the project WAKOS – Wasser an den Küsten Ostfrieslands. WAKOS is financed with funding provided by the German Federal Ministry of Education and Research (BMBF; Förderkennzeichen 01LR2003A). Johanna Baehr was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany's Excellence Strategy – EXC 2037 “CLICCS – Climate, Climatic Change, and Society” – project number: 390683824, contribution to the Center for Earth System Research and Sustainability (CEN) of Universität Hamburg. Johanna Baehr and Sebastian Brune were supported by Copernicus Climate Change Service, funded by the EU, under contract C3S2-370. We thank the German Computing Center (DKRZ) for providing their computing resources.

We thank the three reviewers for their valuable comments on the manuscript, as well as Joaquim Pinto for editing the paper.

This research has been supported by the Bundesministerium für Bildung und Forschung (grant no. 01LR2003A).

The article processing charges for this open-access publication were covered by the Helmholtz-Zentrum Hereon.

This paper was edited by Joaquim G. Pinto and reviewed by Lisa Degenhardt and two anonymous referees.

Alexandersson, H., Schmith, T., Iden, K., and Tuomenvirta, H.: Long-term variations of the storm climate over NW Europe, Global Atmosphere and Ocean System, 6, 97–120, 1998. a

Athanasiadis, P. J., Bellucci, A., Hermanson, L., Scaife, A. A., MacLachlan, C., Arribas, A., Materia, S., Borrelli, A., and Gualdi, S.: The Representation of Atmospheric Blocking and the Associated Low-Frequency Variability in Two Seasonal Prediction Systems, J. Climate, 27, 9082–9100, https://doi.org/10.1175/jcli-d-14-00291.1, 2014. a

Athanasiadis, P. J., Bellucci, A., Scaife, A. A., Hermanson, L., Materia, S., Sanna, A., Borrelli, A., MacLachlan, C., and Gualdi, S.: A Multisystem View of Wintertime NAO Seasonal Predictions, J. Climate, 30, 1461–1475, https://doi.org/10.1175/jcli-d-16-0153.1, 2017. a, b

Athanasiadis, P. J., Yeager, S., Kwon, Y.-O., Bellucci, A., Smith, D. W., and Tibaldi, S.: Decadal predictability of North Atlantic blocking and the NAO, npj Clim. Atmos. Sci., 3, 20, https://doi.org/10.1038/s41612-020-0120-6, 2020. a

Baldwin, M. P. and Dunkerton, T. J.: Stratospheric Harbingers of Anomalous Weather Regimes, Science, 294, 581–584, https://doi.org/10.1126/science.1063315, 2001. a

Barnston, A. G.: Correspondence among the Correlation, RMSE, and Heidke Forecast Verification Measures; Refinement of the Heidke Score, Weather Forecast., 7, 699–709, https://doi.org/10.1175/1520-0434(1992)007<0699:catcra>2.0.co;2, 1992. a

Befort, D. J., Wild, S., Knight, J. R., Lockwood, J. F., Thornton, H. E., Hermanson, L., Bett, P. E., Weisheimer, A., and Leckebusch, G. C.: Seasonal forecast skill for extratropical cyclones and windstorms, Q. J. Roy. Meteor. Soc., 145, 92–104, https://doi.org/10.1002/qj.3406, 2018. a

Boer, G. J. and Hamilton, K.: QBO influence on extratropical predictive skill, Clim. Dynam., 31, 987–1000, https://doi.org/10.1007/s00382-008-0379-5, 2008. a

Brier, G. W.: Verification of forecasts expressed in terms of probability, Mon. Weather Rev., 78, 1–3, https://doi.org/10.1175/1520-0493(1950)078<0001:VOFEIT>2.0.CO;2, 1950. a

Brune, S., Pohlmann, H., Müller, W., Nielsen, D. M., Hövel, L., Koul, V., and Baehr, J.: MPI-ESM-LR_1.2.01p5 decadal predictions localEnKF large ensemble: monthly mean values members 17 to 80, DOKU at DKRZ [data set], https://hdl.handle.net/21.14106/c69ceecb1584cc50247ae6e492fb1ef33e65ac37 (last access: 18 July 2023), 2022. a

Dalelane, C., Dobrynin, M., and Fröhlich, K.: Seasonal Forecasts of Winter Temperature Improved by Higher-Order Modes of Mean Sea Level Pressure Variability in the North Atlantic Sector, Geophys. Res. Lett., 47, e2020GL088717, https://doi.org/10.1029/2020gl088717, 2020. a

Degenhardt, L., Leckebusch, G. C., and Scaife, A. A.: Large-scale circulation patterns and their influence on European winter windstorm predictions, Clim. Dynam., 60, 3597–3611, https://doi.org/10.1007/s00382-022-06455-2, 2022. a, b, c

Delle Monache, L., Eckel, F. A., Rife, D. L., Nagarajan, B., and Searight, K.: Probabilistic Weather Prediction with an Analog Ensemble, Mon. Weather Rev., 141, 3498–3516, https://doi.org/10.1175/mwr-d-12-00281.1, 2013. a

Dobrynin, M., Domeisen, D. I. V., Müller, W. A., Bell, L., Brune, S., Bunzel, F., Düsterhus, A., Fröhlich, K., Pohlmann, H., and Baehr, J.: Improved Teleconnection-Based Dynamical Seasonal Predictions of Boreal Winter, Geophys. Res. Lett., 45, 3605–3614, https://doi.org/10.1002/2018gl077209, 2018. a, b, c, d, e

Domeisen, D. I. V., Sun, L., and Chen, G.: The role of synoptic eddies in the tropospheric response to stratospheric variability, Geophys. Res. Lett., 40, 4933–4937, https://doi.org/10.1002/grl.50943, 2013. a

Domeisen, D. I. V., Butler, A. H., Fröhlich, K., Bittner, M., Müller, W. A., and Baehr, J.: Seasonal Predictability over Europe Arising from El Niño and Stratospheric Variability in the MPI-ESM Seasonal Prediction System, J. Climate, 28, 256–271, https://doi.org/10.1175/JCLI-D-14-00207.1, 2015. a

Domeisen, D. I. V., Grams, C. M., and Papritz, L.: The role of North Atlantic–European weather regimes in the surface impact of sudden stratospheric warming events, Weather Clim. Dynam., 1, 373–388, https://doi.org/10.5194/wcd-1-373-2020, 2020. a

Dunstone, N., Smith, D., Scaife, A., Hermanson, L., Eade, R., Robinson, N., Andrews, M., and Knight, J.: Skilful predictions of the winter North Atlantic Oscillation one year ahead, Nat. Geosci., 9, 809–814, https://doi.org/10.1038/ngeo2824, 2016. a

Ebdon, R.: The Quasi-Biennial Oscillation and its association with tropospheric circulation patterns, Meteorol. Mag., 104, 282–297, 1975. a

Fereday, D. R., Maidens, A., Arribas, A., Scaife, A. A., and Knight, J. R.: Seasonal forecasts of northern hemisphere winter 2009/10, Environ. Res. Lett., 7, 034031, https://doi.org/10.1088/1748-9326/7/3/034031, 2012. a

Hansen, F., Kruschke, T., Greatbatch, R. J., and Weisheimer, A.: Factors Influencing the Seasonal Predictability of Northern Hemisphere Severe Winter Storms, Geophys. Res. Lett., 46, 365–373, https://doi.org/10.1029/2018gl079415, 2019. a

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horányi, A., Muñoz-Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R. J., Hólm, E., Janisková, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thépaut, J.-N.: The ERA5 global reanalysis, Q. J. Roy. Meteor. Soc., 146, 1999–2049, https://doi.org/10.1002/qj.3803, 2020. a

Hersbach, H., Bell, B., Berrisford, P., Biavati, G., Horányi, A., Muñoz Sabater, J., Nicolas, J., Peubey, C., Radu, R., Rozum, I., Schepers, D., Simmons, A., Soci, C., Dee, D., Thépaut, J.-N.: ERA5 monthly averaged data on pressure levels from 1940 to present, Copernicus Climate Change Service (C3S) Climate Data Store (CDS) [data set], https://doi.org/10.24381/cds.6860a573, 2023. a

Holton, J. R. and Tan, H.-C.: The Influence of the Equatorial Quasi-Biennial Oscillation on the Global Circulation at 50 mb, J. Atmos. Sci., 37, 2200–2208, https://doi.org/10.1175/1520-0469(1980)037<2200:tioteq>2.0.co;2, 1980. a

Hövel, L., Brune, S., and Baehr, J.: Decadal Prediction of Marine Heatwaves in MPI-ESM, Geophys. Res. Lett., 49, e2022GL099347, https://doi.org/10.1029/2022gl099347, 2022. a

Ilyina, T., Six, K. D., Segschneider, J., Maier-Reimer, E., Li, H., and Núñez-Riboni, I.: Global ocean biogeochemistry model HAMOCC: Model architecture and performance as component of the MPI–Earth system model in different CMIP5 experimental realizations, J. Adv. Model. Earth Sy., 5, 287–315, https://doi.org/10.1029/2012MS000178, 2013. a

Jungclaus, J. H., Fischer, N., Haak, H., Lohmann, K., Marotzke, J., Matei, D., Mikolajewicz, U., Notz, D., and Storch, J. S.: Characteristics of the ocean simulations in the Max Planck Institute Ocean Model (MPIOM) the ocean component of the MPI–Earth system model, J. Adv. Model. Earth Sy., 5, 422–446, https://doi.org/10.1002/jame.20023, 2013. a

Kalnay, E., Kanamitsu, M., Kistler, R., Collins, W., Deaven, D., Gandin, L., Iredell, M., Saha, S., White, G., Woollen, J., Zhu, Y., Leetmaa, A., Reynolds, R., Chelliah, M., Ebisuzaki, W., Higgins, W., Janowiak, J., Mo, K. C., Ropelewski, C., Wang, J., Jenne, R., and Joseph, D.: The NCEP/NCAR 40-Year Reanalysis Project, B. Am. Meteorol. Soc., 77, 437–471, https://doi.org/10.1175/1520-0477(1996)077<0437:tnyrp>2.0.co;2, 1996. a

Kang, D., Lee, M., Im, J., Kim, D., Kim, H., Kang, H., Schubert, S. D., Arribas, A., and MacLachlan, C.: Prediction of the Arctic Oscillation in boreal winter by dynamical seasonal forecasting systems, Geophys. Res. Lett., 41, 3577–3585, https://doi.org/10.1002/2014gl060011, 2014. a

Krieger, D. and Brune, S.: MPI-ESM-LR1.2 decadal hindcast ensemble 3-hourly German Bight MSLP, DOKU at DKRZ [data set], http://hdl.handle.net/21.14106/04bc4cb2c0871f37433a73ee38189690955e1f90 (last access: 20 February 2024), 2022a. a, b

Krieger, D. and Brune, S.: MPI-ESM-LR1.2 decadal hindcast ensemble yearly German Bight storm activity, DOKU at DKRZ [data set], http://hdl.handle.net/21.14106/e14ca8b63ccb46f2b6c9ed56227a0ac097392d0d (last access: 18 July 2023), 2022b. a

Krieger, D., Krueger, O., Feser, F., Weisse, R., Tinz, B., and von Storch, H.: German Bight storm activity, 1897—2018, Int. J. Climatol., 41, E2159–E2177, https://doi.org/10.1002/joc.6837, 2021. a, b, c, d, e, f

Krieger, D., Brune, S., Pieper, P., Weisse, R., and Baehr, J.: Skillful decadal prediction of German Bight storm activity, Nat. Hazards Earth Syst. Sci., 22, 3993–4009, https://doi.org/10.5194/nhess-22-3993-2022, 2022.

Krueger, O. and von Storch, H.: Evaluation of an Air Pressure–Based Proxy for Storm Activity, J. Climate, 24, 2612–2619, https://doi.org/10.1175/2011JCLI3913.1, 2011.

Krueger, O., Feser, F., and Weisse, R.: Northeast Atlantic Storm Activity and Its Uncertainty from the Late Nineteenth to the Twenty-First Century, J. Climate, 32, 1919–1931, https://doi.org/10.1175/JCLI-D-18-0505.1, 2019.

Kruschke, T., Rust, H. W., Kadow, C., Leckebusch, G. C., and Ulbrich, U.: Evaluating decadal predictions of northern hemispheric cyclone frequencies, Tellus A, 66, 22830, https://doi.org/10.3402/tellusa.v66.22830, 2014.

Kruschke, T., Rust, H. W., Kadow, C., Müller, W. A., Pohlmann, H., Leckebusch, G. C., and Ulbrich, U.: Probabilistic evaluation of decadal prediction skill regarding Northern Hemisphere winter storms, Meteorol. Z., 25, 721–738, https://doi.org/10.1127/metz/2015/0641, 2016.

Kunsch, H. R.: The Jackknife and the Bootstrap for General Stationary Observations, Ann. Stat., 17, 1217–1241, https://doi.org/10.1214/aos/1176347265, 1989.

Liu, R. Y. and Singh, K.: Moving blocks jackknife and bootstrap capture weak dependence, in: Exploring the Limits of Bootstrap, edited by: LePage, R. and Billard, L., Wiley, 225–248, ISBN 978-0-471-53631-4, 1992.

Lorenz, E. N.: Atmospheric Predictability as Revealed by Naturally Occurring Analogues, J. Atmos. Sci., 26, 636–646, https://doi.org/10.1175/1520-0469(1969)26<636:aparbn>2.0.co;2, 1969.

Lu, H., Bracegirdle, T. J., Phillips, T., Bushell, A., and Gray, L.: Mechanisms for the Holton–Tan relationship and its decadal variation, J. Geophys. Res.-Atmos., 119, 2811–2830, https://doi.org/10.1002/2013jd021352, 2014.

Marshall, A. G. and Scaife, A. A.: Impact of the QBO on surface winter climate, J. Geophys. Res., 114, D18110, https://doi.org/10.1029/2009jd011737, 2009.

Mauritsen, T., Bader, J., Becker, T., Behrens, J., Bittner, M., Brokopf, R., Brovkin, V., Claussen, M., Crueger, T., Esch, M., Fast, I., Fiedler, S., Fläschner, D., Gayler, V., Giorgetta, M., Goll, D. S., Haak, H., Hagemann, S., Hedemann, C., Hohenegger, C., Ilyina, T., Jahns, T., Jimenéz-de-la Cuesta, D., Jungclaus, J., Kleinen, T., Kloster, S., Kracher, D., Kinne, S., Kleberg, D., Lasslop, G., Kornblueh, L., Marotzke, J., Matei, D., Meraner, K., Mikolajewicz, U., Modali, K., Möbis, B., Müller, W. A., Nabel, J. E. M. S., Nam, C. C. W., Notz, D., Nyawira, S.-S., Paulsen, H., Peters, K., Pincus, R., Pohlmann, H., Pongratz, J., Popp, M., Raddatz, T. J., Rast, S., Redler, R., Reick, C. H., Rohrschneider, T., Schemann, V., Schmidt, H., Schnur, R., Schulzweida, U., Six, K. D., Stein, L., Stemmler, I., Stevens, B., von Storch, J.-S., Tian, F., Voigt, A., de Vrese, P., Wieners, K.-H., Wilkenskjeld, S., Winkler, A., and Roeckner, E.: Developments in the MPI-M Earth System Model version 1.2 (MPI-ESM1.2) and Its Response to Increasing CO2, J. Adv. Model. Earth Sy., 11, 998–1038, https://doi.org/10.1029/2018MS001400, 2019.

Menary, M. B., Mignot, J., and Robson, J.: Skilful decadal predictions of subpolar North Atlantic SSTs using CMIP model-analogues, Environ. Res. Lett., 16, 064090, https://doi.org/10.1088/1748-9326/ac06fb, 2021.

Moemken, J., Feldmann, H., Pinto, J. G., Buldmann, B., Laube, N., Kadow, C., Paxian, A., Tiedje, B., Kottmeier, C., and Marotzke, J.: The regional MiKlip decadal prediction system for Europe: Hindcast skill for extremes and user-oriented variables, Int. J. Climatol., 41, E1944–E1958, https://doi.org/10.1002/joc.6824, 2021.

Neddermann, N.-C., Müller, W. A., Dobrynin, M., Düsterhus, A., and Baehr, J.: Seasonal predictability of European summer climate re-assessed, Clim. Dynam., 53, 3039–3056, https://doi.org/10.1007/s00382-019-04678-4, 2019.

Peings, Y.: Ural Blocking as a Driver of Early-Winter Stratospheric Warmings, Geophys. Res. Lett., 46, 5460–5468, https://doi.org/10.1029/2019gl082097, 2019.

Reick, C. H., Raddatz, T., Brovkin, V., and Gayler, V.: Representation of natural and anthropogenic land cover change in MPI-ESM, J. Adv. Model. Earth Sy., 5, 459–482, https://doi.org/10.1002/jame.20022, 2013.

Renggli, D., Leckebusch, G. C., Ulbrich, U., Gleixner, S. N., and Faust, E.: The Skill of Seasonal Ensemble Prediction Systems to Forecast Wintertime Windstorm Frequency over the North Atlantic and Europe, Mon. Weather Rev., 139, 3052–3068, https://doi.org/10.1175/2011mwr3518.1, 2011.

Riddle, E. E., Butler, A. H., Furtado, J. C., Cohen, J. L., and Kumar, A.: CFSv2 ensemble prediction of the wintertime Arctic Oscillation, Clim. Dynam., 41, 1099–1116, https://doi.org/10.1007/s00382-013-1850-5, 2013.

Scaife, A. A., Arribas, A., Blockley, E., Brookshaw, A., Clark, R. T., Dunstone, N., Eade, R., Fereday, D., Folland, C. K., Gordon, M., Hermanson, L., Knight, J. R., Lea, D. J., MacLachlan, C., Maidens, A., Martin, M., Peterson, A. K., Smith, D., Vellinga, M., Wallace, E., Waters, J., and Williams, A.: Skillful long-range prediction of European and North American winters, Geophys. Res. Lett., 41, 2514–2519, https://doi.org/10.1002/2014gl059637, 2014a.

Scaife, A. A., Athanassiadou, M., Andrews, M., Arribas, A., Baldwin, M., Dunstone, N., Knight, J., MacLachlan, C., Manzini, E., Müller, W. A., Pohlmann, H., Smith, D., Stockdale, T., and Williams, A.: Predictability of the quasi-biennial oscillation and its northern winter teleconnection on seasonal to decadal timescales, Geophys. Res. Lett., 41, 1752–1758, https://doi.org/10.1002/2013gl059160, 2014b.

Scaife, A. A., Karpechko, A. Y., Baldwin, M. P., Brookshaw, A., Butler, A. H., Eade, R., Gordon, M., MacLachlan, C., Martin, N., Dunstone, N., and Smith, D.: Seasonal winter forecasts and the stratosphere, Atmos. Sci. Lett., 17, 51–56, https://doi.org/10.1002/asl.598, 2016.

Schenk, F. and Zorita, E.: Reconstruction of high resolution atmospheric fields for Northern Europe using analog-upscaling, Clim. Past, 8, 1681–1703, https://doi.org/10.5194/cp-8-1681-2012, 2012.

Schmidt, H. and von Storch, H.: German Bight storms analysed, Nature, 365, 791, https://doi.org/10.1038/365791a0, 1993.

Schneck, R., Reick, C. H., and Raddatz, T.: Land contribution to natural CO2 variability on time scales of centuries, J. Adv. Model. Earth Sy., 5, 354–365, https://doi.org/10.1002/jame.20029, 2013.

Siew, P. Y. F., Li, C., Sobolowski, S. P., and King, M. P.: Intermittency of Arctic–mid-latitude teleconnections: stratospheric pathway between autumn sea ice and the winter North Atlantic Oscillation, Weather Clim. Dynam., 1, 261–275, https://doi.org/10.5194/wcd-1-261-2020, 2020.

Song, Y. and Robinson, W. A.: Dynamical Mechanisms for Stratospheric Influences on the Troposphere, J. Atmos. Sci., 61, 1711–1725, https://doi.org/10.1175/1520-0469(2004)061<1711:dmfsio>2.0.co;2, 2004.

Stevens, B., Giorgetta, M., Esch, M., Mauritsen, T., Crueger, T., Rast, S., Salzmann, M., Schmidt, H., Bader, J., Block, K., Brokopf, R., Fast, I., Kinne, S., Kornblueh, L., Lohmann, U., Pincus, R., Reichler, T., and Roeckner, E.: Atmospheric component of the MPI–M Earth System Model: ECHAM6, J. Adv. Model. Earth Sy., 5, 146–172, https://doi.org/10.1002/jame.20015, 2013.

Thompson, D. W. J. and Wallace, J. M.: The Arctic oscillation signature in the wintertime geopotential height and temperature fields, Geophys. Res. Lett., 25, 1297–1300, https://doi.org/10.1029/98gl00950, 1998.

Van den Dool, H. M.: Searching for analogues, how long must we wait?, Tellus A, 46, 314–324, https://doi.org/10.1034/j.1600-0870.1994.t01-2-00006.x, 1994.

Wang, J., Kim, H.-M., and Chang, E. K. M.: Interannual Modulation of Northern Hemisphere Winter Storm Tracks by the QBO, Geophys. Res. Lett., 45, 2786–2794, https://doi.org/10.1002/2017GL076929, 2018.

Wilks, D. S.: On “Field Significance” and the False Discovery Rate, J. Appl. Meteorol. Clim., 45, 1181–1189, https://doi.org/10.1175/jam2404.1, 2006.

Wilks, D. S.: Chapter 8 – Forecast Verification, in: Statistical Methods in the Atmospheric Sciences, edited by: Wilks, D. S., vol. 100 of International Geophysics, Academic Press, 301–394, https://doi.org/10.1016/B978-0-12-385022-5.00008-7, 2011.