the Creative Commons Attribution 4.0 License.

Pre-disaster mapping with drones: an urban case study in Victoria, British Columbia, Canada

Maja Kucharczyk

Chris H. Hugenholtz

We report a case study using drone-based imagery to develop a pre-disaster 3-D map of downtown Victoria, British Columbia, Canada. This represents the first drone mapping mission over an urban area approved by Canada's aviation authority. The goal was to assess the quality of the pre-disaster 3-D data in the context of geospatial accuracy and building representation. The images were acquired with a senseFly eBee Plus fixed-wing drone with real-time kinematic/post-processed kinematic functionality. Results indicate that the spatial accuracies achieved with this drone would allow for sub-meter building collapse detection, but the non-gimbaled camera was insufficient for capturing building facades.

1.1 Background

Currently, 55 % of the global population resides in urban areas, and this is projected to increase to 68 % by 2050 (United Nations, 2018). Increasing global population and urbanization (particularly in vulnerable areas) are factors that can contribute to increased death and destruction by natural hazards like earthquakes and tropical cyclones. In addition to initiatives such as increased quality of construction, alarm systems, and proximity to rescue services, pre-disaster mapping can help increase a city's resilience to disasters (Pu, 2017). Maps combining vector layers, digital elevation models, and aerial/satellite imagery are powerful tools for mitigation and preparedness before a disaster strikes (Pu, 2017). Copernicus Emergency Management Service (Copernicus EMS), one of the main contributors of disaster management maps globally, has used pre-disaster data to produce thousands of reference maps (showing territories and assets), pre-disaster situation maps (showing hazard levels, evacuation plans, and modeling scenarios), and damage grading maps (showing the distribution and level of damage to buildings and infrastructure). Copernicus EMS generates damage grading maps by visually comparing pre- and post-disaster satellite imagery (Copernicus EMS, 2017). Nearby debris is used as a proxy for a building's structural damage, as building facades cannot be directly examined (Copernicus EMS, 2017).

The leading cause of death in an earthquake is building collapse (Moya et al., 2018). Remote sensing can potentially assist first responders in rapidly locating collapsed buildings to prioritize search and rescue efforts (Moya et al., 2018). However, clouds and access to satellite imagery can cause delays in analysis and preclude the traditional 2-D approach from being useful for search and rescue. Furthermore, partial building collapse, which can trap and kill victims, generates lower amounts of debris than complete collapse, so the dependence on debris as a proxy for collapse becomes less reliable. Research has shown that 2.5-D data can build upon the traditional 2-D approach and increase the reliability of collapse detection by observing elevation changes to buildings. Following the 2016 Kumamoto earthquake in Japan, Moya et al. (2018) detected collapsed buildings using pre- and post-earthquake light detection and ranging (lidar) digital surface models (DSMs). For each building, they calculated the average height difference between the DSMs and manually set a threshold value to detect collapse – this technique had a Cohen's kappa coefficient and overall accuracy of 0.80 and 93 %, respectively (Moya et al., 2018). Pre-event lidar data, however, can often be outdated, leading to false detections, or unavailable, especially in less-developed parts of the world. Post-event lidar data may be difficult to rapidly obtain. To address these operational challenges, drones are an alternative platform for acquiring 2.5-D and 3-D data, and when stored locally for emergency mapping, they can be used to rapidly acquire data. Drone-derived aerial imagery, when paired with structure-from-motion multi-view stereo image processing software, can be used to generate sub-decimeter resolution orthomosaics, DSMs, and photorealistic 3-D models in the form of colorized point clouds and textured meshes.

Drone-based mapping can also potentially support longer-term needs assessments and planning of repair and reconstruction activities by surveying building damage levels. The traditional 2-D approach with satellite imagery only provides information about building roofs and nearby debris, and previous research has shown that oblique perspectives of building facades are valuable for discerning between lower grades of building damage (Kakooei and Baleghi, 2017; Masi et al., 2017). Previous studies have conducted drone-based 3-D mapping of buildings following a disaster. The motivation is to complement ground-based building damage assessments: cataloging the exterior damage in 3-D can support the planning and prioritizing of subsequent, more-thorough ground-based assessments (Vetrivel et al., 2018) and the planning and monitoring of repair and reconstruction activities. Previous studies (e.g., Fernandez Galarreta et al., 2015; Cusicanqui et al., 2018) have reported that damage features such as deformations, cracks, debris, inclined walls, and partially collapsed roofs are identifiable in drone-based 3-D point clouds and mesh models. These findings demonstrate that drone 3-D data are capable of supporting post-disaster activities. However, previous studies have been limited to drone-based 3-D mapping of (i) a single building (Achille et al., 2015; Meyer et al., 2015); (ii) small, historic municipalities (Vetrivel et al., 2015, 2018; Dominici et al., 2017; Calantropio et al., 2018; Cusicanqui et al., 2018); or (iii) modern cities (but without focus on the quality of building representation in the 3-D data; Cusicanqui et al., 2018; Vetrivel et al., 2018). It is important to understand how drone-based 3-D data would reconstruct a cityscape, particularly with a grid-based survey to capture multiple city blocks in a single flight. This flight pattern would balance areal coverage with 3-D reconstruction quality. 
The dense spacing of buildings and the presence of high-rises in an urban scene create considerable potential for camera occlusion and may result in 3-D mesh defects such as inaccurate shapes, holes, and blurred textures (Wu et al., 2018).

In addition to issues with photogrammetry, it is challenging to collect drone data over dense, urban areas due to aviation regulations that were designed to protect public safety. As such, in a disaster context, drone data over cities have generally been collected in the post-disaster phases, when destruction is widespread and these data are in high demand. With historic emphasis on data collection in the post-disaster phases, it is important to not detract from pre-disaster mapping. Pre-disaster mapping not only provides baseline data from which to assess changes, but it is also a crucial exercise that enables emergency management actors to establish operational protocols to maximize the effectiveness of drones in emergencies. These protocols pertain to drone hardware and software, data collection, data processing, and data analysis.

Here, we present a case study of pre-disaster mapping with a drone in Victoria, British Columbia, Canada. Victoria has at least a 30 % probability of experiencing a significantly damaging earthquake in the next 50 years (AIR Worldwide, 2013). A 2016 report on the seismic vulnerability of Victoria presented a risk assessment for all buildings (13 330 buildings) in Victoria under various earthquake scenarios and levels of ground shaking (VC Structural Dynamics Ltd, 2016). The report concluded that 30 % of the buildings (3936 buildings) have a high seismic risk, meaning they have at least a 5 % probability of complete damage in a 50-year period (VC Structural Dynamics Ltd, 2016). This pre-disaster mapping exercise was undertaken for the City of Victoria's Emergency Management Division in partnership with GlobalMedic, a Canadian disaster relief charity. This was the first Transport Canada-approved drone mapping mission over a major Canadian city. We were restricted by regulations to use a specific platform: a 1.1 kg senseFly eBee fixed-wing drone. The overarching goal of this case study was to assess the quality of the drone data that we were able to obtain in a manner adhering to federal regulations.

1.2 Objectives

The first objective was to assess the geospatial accuracy of the drone data. Geospatial accuracy is important for change detection applications, as it relates to the quality of registration between pre- and post-disaster datasets. This was done by first assessing the vertical accuracy of the drone DSM using 47 ground-surveyed checkpoints. Then, a lidar DSM was subtracted from the drone DSM to visually assess the horizontal alignment of rooftops as a qualitative measure of horizontal accuracy. The second objective was to assess the quality of 3-D building representation. The only drone legally approved for urban overflight in Canada in 2018 presents challenges for 3-D mapping of cities, as it is a fixed-wing drone with a non-gimbaled camera. Research has shown that high camera tilt angles, which are not achievable with the regulatory platform for this flight, will result in higher reconstruction density (fewer data gaps) and higher precision of points on building facades than lower camera tilt angles (Rupnik et al., 2015). The quality assessment of 3-D building representation was done by visually assessing the drone 3-D textured mesh and using Google 3-D (i.e., “3-D Buildings” layer in Google Earth) as a reference for a building's appearance. Additionally, we applied a method previously used on post-disaster, drone-derived 3-D point clouds to quantify data gaps on sample building facades.

1.3 Regulatory background

Transport Canada is the aviation authority that regulates drone operations in Canadian airspace. The regulations in 2018 required case-by-case permission for drone flights in urban areas. Permission was sought by submitting an application for a Special Flight Operations Certificate. Obtaining this certificate required sufficient ground and flight training and documentation of standard operating procedures, emergency procedures, drone maintenance procedures, and more. Additionally, coordination with air traffic control (Nav Canada) was required to perform the flight, as downtown Victoria is within controlled airspace, with nearby airports, heliports, and seaplane bases causing high-density air traffic. In 2018, the only drone legally approved for urban overflight in Canada was the senseFly eBee, of which the “Plus” model was used for its higher georeferencing accuracy. The senseFly eBee Plus is a 1.1 kg fixed-wing drone with a 1.1 m wingspan and is made of lightweight expanded polypropylene foam, carbon fiber, and composite materials. At the time of the flight, the eBee Classic, SQ, and Plus models were the lightest on the list of Transport Canada-compliant drones, which included drones meeting federal safety and quality standards. For this flight, the senseFly eBee drone was approved by Transport Canada due to its light weight and ability to glide to a landing.

2.1 Flight area

The drone flight covered a 1 km² area of downtown Victoria, British Columbia, Canada. The western half and eastern half of the flight area covered parts of the Historic Commercial District (HCD) and Central Business District (CBD), respectively, resulting in image capture of various building types and heights. The HCD contains an undulating streetscape with low- to mid-rise, brick- and stone-facade buildings alternating between one and five stories, including boutique hotels, heritage buildings, businesses, and offices (CoV, 2011). The CBD contains high-density, mid- to high-rise commercial and residential buildings (CoV, 2011). The building heights within the flight area ranged from 4 to 55 m, and street widths varied between 7 and 24 m.

2.2 Drone hardware and flight planning

The senseFly eBee Plus drone is equipped with real-time kinematic (RTK)/post-processed kinematic (PPK) functionality. Images were acquired with a senseFly Sensor Optimised for Drone Applications (SODA) red-green-blue (RGB) 20-megapixel camera. The RTK/PPK image georeferencing capabilities of the drone replaced the need for ground control points (GCPs), which are not practical to distribute and survey in an emergency (i.e., post-disaster) mapping context. It is important to note that, for pre-disaster mapping, GCPs should be used to maximize geospatial accuracy. Hugenholtz et al. (2016) demonstrated the improvement in DSM vertical accuracy when using a non-RTK/PPK senseFly eBee with GCPs compared to an RTK/PPK-enabled senseFly eBee without GCPs. For this pre-disaster mapping exercise in downtown Victoria, we chose to use RTK/PPK image georeferencing because this method is also applicable to post-disaster mapping. One of our objectives was to assess the geospatial accuracy of the pre-disaster data, which has implications for the use of RTK/PPK-enabled drones for post-disaster mapping and change detection applications.

The drone's PPK mode was used with correction data obtained from the Natural Resources Canada (NRCan) Canadian Active Control System (Albert Head reference station, 10 km from flight area). SenseFly eMotion (v3) software (senseFly, 2018) was used to plan the flight. The flight was grid-based, composed of orthogonal flight lines running nonparallel with streets (i.e., approximately 45° offset). The addition of perpendicular flight lines and the orientation of the grid were used to increase image coverage of building facades. The imagery frontal and lateral overlap were set to 75 %, and the flight altitude was 120 m a.g.l. (above ground level).
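As a rough illustration of how the 75 % overlap and 120 m flight altitude relate to the spacing of flight lines and exposures, the sketch below uses a simple nadir pinhole-camera model. The function names, the sensor dimensions, and the focal length in the usage lines are illustrative assumptions; they are not values reported in this study, and they do not reproduce how eMotion plans a flight.

```python
def ground_footprint(altitude_m, sensor_dim_mm, focal_length_mm):
    """Ground distance (m) covered by one sensor dimension for a nadir image.

    Assumes a pinhole camera pointing straight down over flat ground.
    """
    if focal_length_mm <= 0:
        raise ValueError("focal length must be positive")
    return altitude_m * sensor_dim_mm / focal_length_mm


def spacing_for_overlap(footprint_m, overlap):
    """Distance (m) between adjacent images or flight lines for a given overlap.

    overlap is a fraction in [0, 1), e.g. 0.75 for 75 %.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


# Hypothetical camera geometry (assumed for illustration only).
SENSOR_WIDTH_MM = 13.2   # across-track sensor dimension (assumption)
SENSOR_HEIGHT_MM = 8.8   # along-track sensor dimension (assumption)
FOCAL_LENGTH_MM = 10.6   # assumption

ALTITUDE_M = 120.0       # flight altitude stated in the text
OVERLAP = 0.75           # frontal and lateral overlap stated in the text

across = ground_footprint(ALTITUDE_M, SENSOR_WIDTH_MM, FOCAL_LENGTH_MM)
along = ground_footprint(ALTITUDE_M, SENSOR_HEIGHT_MM, FOCAL_LENGTH_MM)

line_spacing = spacing_for_overlap(across, OVERLAP)   # between flight lines
photo_spacing = spacing_for_overlap(along, OVERLAP)   # between exposures
print(f"line spacing: {line_spacing:.1f} m, photo spacing: {photo_spacing:.1f} m")
```

Because the camera is non-gimbaled and the aircraft pitches in flight, the real footprints deviate from this nadir model, so the figures should be read as an order-of-magnitude guide only.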

2.3 Drone image acquisition

The flight was conducted in the morning hours of 14 June 2018. A morning flight was chosen to coincide with low air traffic; however, the tradeoff for image acquisition is increased building shadow area and data occlusion compared to solar noon. The ground control station was set up on a parking garage rooftop within the flight area. The parking garage, surrounded by relatively low buildings and an open courtyard, allowed for unobstructed takeoff and landing, a visual line of sight, and a radio signal between the drone and the ground control station. A total of 828 images were captured. The median image pitch angle was 7.35° off nadir (3.55° interquartile range), with a minimum and maximum of 1.22 and 11.83°, respectively.

2.4 Image processing

The images were processed using a high-performance computer (an Intel® Core™ i9-7900X CPU at 3.30 GHz with 64 GB RAM and an NVIDIA GeForce GTX 1080 GPU). First, senseFly eMotion software was used for PPK processing by incorporating raw global navigation satellite system (GNSS) observations from the reference station and the drone to refine the image geotags. The geotagged images were processed using Pix4Dmapper Pro (Pix4D; v4.3.27; Pix4D, 2018), which is structure-from-motion multi-view stereo (SfM–MVS) mapping software. SfM–MVS generally consists of the following steps (as outlined by Westoby et al., 2012). First, computer vision algorithms search through each image to identify “features”, which are pixel sets that are robust to changes in scale, illumination, and 3-D viewing angle. Next, the features are assigned unique “descriptors”, which allow for the same features to be identified across multiple images and for the images to be approximately aligned. This initial image alignment is iteratively optimized via bundle adjustment algorithms, the output of which is a sparse 3-D point cloud of feature correspondences. Multi-view stereo algorithms then densify the sparse point cloud, typically by 2 or more orders of magnitude. The dense point cloud is then used to generate a 3-D textured mesh, which is a triangulated surface that is textured using the original images. The dense point cloud is also used to generate a DSM. The DSM and images are used to generate an orthomosaic.

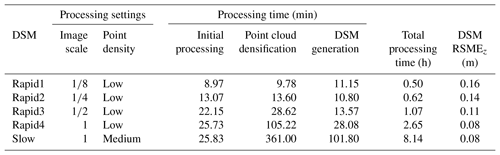

For the first objective of assessing the geospatial accuracy of the drone data in an urban context, five DSMs were generated. Each DSM had increasingly computationally intensive parameters, resulting in an increasingly higher processing time, ranging from 0.50 to 8.14 h. This has important implications for the applicability of a drone-based DSM for rapid building collapse detection, where time is a major factor for emergency responders. Four “rapid” DSMs were generated in Pix4D using values of 1∕8, 1∕4, 1∕2, and 1 for the image scale parameters (step 1: keypoints image scale and step 2: image scale) and using low density for the point cloud. One “slow” DSM was generated using a value of 1 for the image scale parameters, and optimal (medium) density for the point cloud. All five DSMs were generated using three minimum matches, noise filtering, “sharp” surface smoothing, and inverse distance weighting interpolation. For the second objective of assessing 3-D building representation, a 3-D textured mesh was generated in Pix4D using a value of 1 for the image scale parameters, optimal (medium) density for the point cloud, a minimum of three matches, and high resolution for the textured mesh. A medium-resolution mesh was also generated for comparison to the high-resolution mesh.

All Pix4D data outputs had a spatial reference of Universal Transverse Mercator (UTM) Zone 10N, North American Datum of 1983 (NAD83; Canadian Spatial Reference System; CSRS), using the Canadian Geodetic Vertical Datum of 2013 (CGVD2013) for orthometric heights relative to the Canadian Gravimetric Geoid model of 2013 (CGG2013, 2010.0 epoch). The DSMs were assessed for geospatial accuracy, while the point cloud and textured meshes were used to assess 3-D building representation in terms of geometry and texture.

2.5 Geospatial accuracy assessment

To be useful for change detection, such as generating a DSM of difference (DoD) for building collapse detection (e.g., Moya et al., 2018), the drone data must be geospatially accurate. Otherwise, misregistration of the drone data with pre- or post-event data may cause false detections. Therefore, the vertical accuracy of each drone DSM was assessed using 47 ground-surveyed checkpoints. The vertical accuracy assessment was conducted using recommendations from the 2015 American Society for Photogrammetry and Remote Sensing (ASPRS) Positional Accuracy Standards for Digital Geospatial Data (ASPRS, 2015). ASPRS (2015) recommends vertical checkpoints to be ground-surveyed and located on flat or uniformly sloped (≤10 % slope) open terrain away from vertical artifacts and abrupt elevation changes. The checkpoints used in this accuracy assessment were collected using a total station, and they represent sewer manhole covers located on paved roads throughout the study area. Each checkpoint z coordinate (zref) was subtracted from the corresponding drone DSM value (zdrone) to calculate errors (zdrone−zref). A Shapiro–Wilk test (α level of 0.05) and a visual inspection of the histogram, normal Q–Q plot, and box plot indicated that the errors followed a normal distribution. Therefore, vertical accuracy was calculated as the vertical root mean squared error (RMSEz) following Eq. (1):

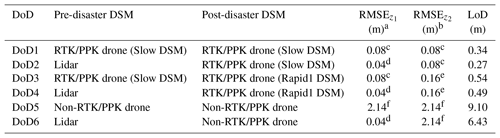

where zi(drone) is the value of the ith cell from the drone DSM, zi(ref) is the z coordinate of the corresponding checkpoint, and the total number of observations is represented by n (ASPRS, 2015). To assess the implications of the vertical accuracies, a level of detection (LoD) was calculated to determine the threshold elevation difference that can be detected using pre- and post-disaster DSMs with known RMSEz values, following Eq. (2):

$$\mathrm{LoD} = 3\sqrt{\mathrm{RMSE}_{\mathrm{pre}}^{2} + \mathrm{RMSE}_{\mathrm{post}}^{2}} \qquad (2)$$

where $\mathrm{RMSE}_{\mathrm{pre}}$ is the RMSEz of the pre-disaster DSM, $\mathrm{RMSE}_{\mathrm{post}}$ is the RMSEz of the post-disaster DSM, and the multiplier, 3, represents the extreme tails of a normal probability distribution (Hugenholtz et al., 2013). The LoDs for several hypothetical DoDs were calculated. Each hypothetical DoD contained a different combination of pre- and post-disaster DSMs. The DSMs included the most rapid PPK-corrected drone DSM (processed in 0.50 h), the slow PPK-corrected drone DSM (processed in 8.14 h), a non-RTK/PPK drone DSM, and a lidar DSM. The RMSEz values for the PPK-corrected drone DSMs were experimentally derived in this study. The RMSEz value for the non-RTK/PPK drone DSM was experimentally derived by Hugenholtz et al. (2016). Based on 180 RTK GNSS vertical checkpoints from a gravel pit, Hugenholtz et al. (2016) calculated an RMSEz of 2.144 m for a non-RTK/PPK senseFly eBee (no GCPs). The RMSEz value for the lidar DSM was experimentally derived in this study. The lidar data were acquired in 2013 with a Leica ALS70-HP sensor from an average flight altitude and speed of 1360 m a.g.l. and 220 kn, respectively. The field of view and average swath width were 47° and 1240 m, respectively. The scan rate was 48.9 Hz, and the laser pulse rate was 370.6 kHz. The lidar point cloud was interpolated into a 0.31 m DSM in ESRI ArcMap (v10.5.1; ESRI, 2018) using inverse distance weighting interpolation and linear void fill. Using the 47 ground-surveyed checkpoints, the RMSEz of the lidar DSM was calculated.
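The two accuracy measures described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' processing code: the function names are hypothetical, and the level of detection is assumed to be three times the root sum of squares of the two RMSEz values, a form that reproduces the LoDs reported in Sect. 3.1 from the RMSEz values given in the text.

```python
import math


def rmse_z(z_drone, z_ref):
    """Vertical RMSE from paired DSM values and checkpoint elevations (m)."""
    if len(z_drone) != len(z_ref) or len(z_drone) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(d - r) ** 2 for d, r in zip(z_drone, z_ref)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def level_of_detection(rmse_pre, rmse_post, multiplier=3.0):
    """Threshold elevation difference (m) for a DSM of difference.

    Assumed form: multiplier * sqrt(rmse_pre**2 + rmse_post**2).
    """
    return multiplier * math.hypot(rmse_pre, rmse_post)


# RMSEz values (m) quoted in the text.
SLOW = 0.08      # slow PPK-corrected drone DSM
RAPID1 = 0.16    # most rapid PPK-corrected drone DSM
LIDAR = 0.04     # lidar DSM
NON_RTK = 2.144  # non-RTK/PPK drone DSM (Hugenholtz et al., 2016)

for label, a, b in [
    ("lidar + slow drone", LIDAR, SLOW),
    ("lidar + rapid drone", LIDAR, RAPID1),
    ("slow drone + rapid drone", SLOW, RAPID1),
    ("lidar + non-RTK/PPK drone", LIDAR, NON_RTK),
    ("non-RTK/PPK drone + non-RTK/PPK drone", NON_RTK, NON_RTK),
]:
    print(f"{label}: LoD = {level_of_detection(a, b):.2f} m")
```

The expression is symmetric in its two arguments, so it does not matter which DSM is treated as the pre-disaster one when computing the LoD.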

To assess horizontal accuracy qualitatively, a DoD was generated by subtracting the lidar DSM from the slow PPK-corrected drone DSM, and the horizontal alignment of roofs in the DoD was inspected visually.

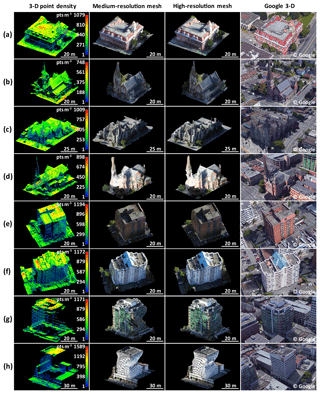

2.6 Assessment of building geometry and texture

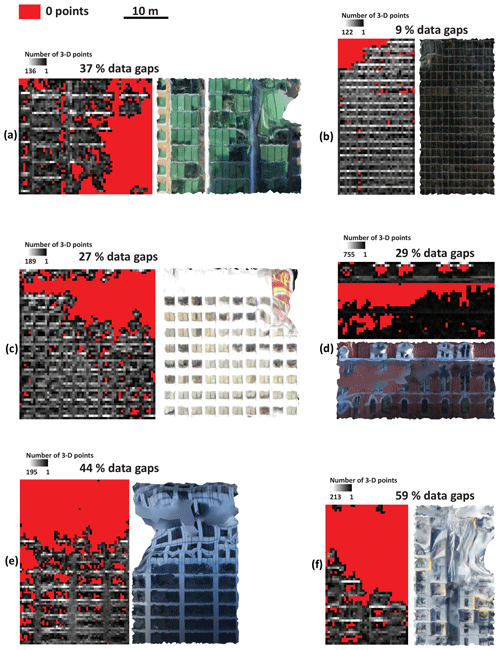

In addition to geospatial accuracy, we assessed the quality of building representation in the drone-derived 3-D data. This assessment has implications for the usability of the 3-D data for identifying damage to building roofs and facades. The medium- and high-resolution textured meshes were visually assessed for quality of building representation in terms of geometry and texture. Eight sample buildings ranging in geometrical complexity were segmented from each mesh using CloudCompare (v2.9.1; CloudCompare, 2018) and were visually compared. Google 3-D (i.e., Google Earth layer “3-D Buildings”) served as a reference for building appearance (Google, 2018). The Google 3-D layer was photogrammetrically derived using nadir and 45° aerial imagery that was collected with a multi-camera system in 2014. To support the visual assessment, each sample building was segmented from the dense point cloud, and each building point cloud was colored by 3-D point density using CloudCompare. To further investigate geometrical and textural distortions within the mesh, the dense point cloud was used to quantify data gaps on building facades (i.e., regions of facades without points). The procedure generally followed Cusicanqui et al. (2018), who assessed the completeness of drone-based point clouds of post-earthquake study areas in Taiwan and Italy. Using CloudCompare, six sample facades were segmented from the dense point cloud. The Rasterize tool was used to project the points of each segmented facade onto a 0.50 m grid, with the projection plane parallel to the facade. Then, a 0.50 m raster was generated, showing the number of 3-D points in each cell. For each raster, the percentage of facade data gaps was calculated by dividing the number of empty cells by the total number of cells. To support the data gap assessment, the sample facades were also segmented from the high-resolution mesh using CloudCompare.
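The facade data gap calculation can be illustrated with a short sketch. It is not CloudCompare's Rasterize implementation: it assumes the facade points have already been projected into 2-D facade-plane coordinates (u along the facade, v up the facade, both in meters from the lower-left corner), and it takes the facade width and height as given. The function name and inputs are hypothetical.

```python
import math


def facade_gap_percentage(points_uv, width_m, height_m, cell_m=0.50):
    """Percentage of empty cells on a facade raster, plus the count grid.

    points_uv: iterable of (u, v) facade-plane coordinates in meters.
    width_m, height_m: facade extent used to define the raster.
    cell_m: raster cell size (0.50 m in the text).
    """
    n_cols = max(1, math.ceil(width_m / cell_m))
    n_rows = max(1, math.ceil(height_m / cell_m))
    counts = [[0] * n_cols for _ in range(n_rows)]

    # Count the number of 3-D points falling in each raster cell.
    for u, v in points_uv:
        col = int(u // cell_m)
        row = int(v // cell_m)
        if 0 <= col < n_cols and 0 <= row < n_rows:
            counts[row][col] += 1

    # Data gaps are the cells that received no points.
    empty = sum(1 for row in counts for c in row if c == 0)
    return 100.0 * empty / (n_rows * n_cols), counts


# Toy facade: 2.0 m wide, 1.0 m tall, three points in two of eight cells.
gap_pct, grid = facade_gap_percentage(
    [(0.1, 0.1), (0.2, 0.2), (1.6, 0.7)], width_m=2.0, height_m=1.0
)
print(f"{gap_pct:.0f} % of cells are empty")
```

In practice the raster extent would come from the segmented facade itself, so the choice of segmentation boundary directly affects the reported gap percentage.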

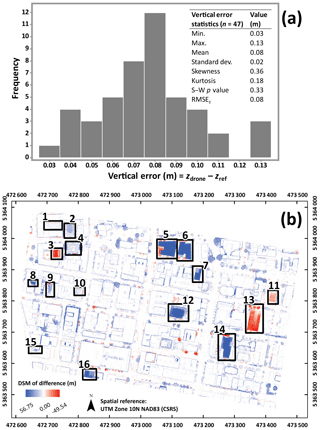

3.1 Geospatial accuracy of drone DSM

The vertical errors of the PPK-corrected drone DSMs and the lidar DSM were calculated using 47 ground-surveyed checkpoints located on sewer manhole covers. For the slow PPK-corrected drone DSM, errors ranged from 0.03 to 0.13 m (Fig. 1a). The mean vertical error was 0.08 m, with a standard deviation of 0.02 m (Fig. 1a), demonstrating that the drone DSM tended to overestimate the elevation of the ground surface. Table 1 shows the RMSEz values of the slow PPK-corrected drone DSM (Slow) and four rapid PPK-corrected drone DSMs (Rapid1–4). RMSEz decreased as total processing time increased (Table 1). The DSM generated in the least amount of time (Rapid1; 0.50 h) had an RMSEz of 0.16 m, which is 0.08 m higher than the RMSEz for the Slow DSM, generated in 8.14 h (Table 1). The non-RTK/PPK drone DSM and lidar DSM had RMSEz values of 2.144 m and 0.04 m, respectively.

Figure 1. Geospatial accuracy results for the slow PPK-corrected drone DSM: (a) a vertical error histogram with statistics and the Shapiro–Wilk (S–W) p value and (b) the DSM of difference, calculated by subtracting DSMlidar from DSMdrone. Blue tints represent elevation overestimations and red tints represent elevation underestimations by DSMdrone. Buildings with major contiguous DSM differences are boxed in black. These contiguous DSM differences are due to changes during the 5 years between the lidar (2013) and drone (2018) data acquisitions, including new construction (1, 2, 4–10, 12, 14–16), structure removal (3, 5, 11), and parking lot excavation (13).

Table 1. DSMs generated with different processing settings in Pix4D (v4.3.27), with processing time (minutes) for each step, the total processing time (hours), and the resulting RMSEz. The processing was done using a high-performance computer (an Intel® Core™ i9-7900X CPU at 3.30 GHz with 64 GB RAM and an NVIDIA GeForce GTX 1080 GPU).

Table 2 shows hypothetical DoDs, each generated with a different combination of pre- and post-disaster DSMs, and their resulting LoDs from Eq. (2). For each hypothetical DoD, the corresponding LoD value indicates that any elevation difference between −LoD and +LoD is likely due to error and cannot be interpreted as real. DoDs generated with one or more DSMs derived from a non-RTK/PPK drone (DoD5 and DoD6) had LoDs of 6.43 and 9.10 m (Table 2). For LoDs attributed to the use of non-RTK/PPK drones, buildings shorter than the LoDs cannot be assessed for collapse, and for assessable buildings, only DoD values exceeding the LoDs are likely to correspond to real collapse. The 6.43 and 9.10 m LoDs exceed the typical height of a single building story, suggesting that DoDs generated with non-RTK/PPK drones cannot be reliably used to detect partial collapse. Conversely, DoDs generated with one or more DSMs from an RTK/PPK drone (DoD1–4) had LoDs ranging from 0.27 to 0.54 m, suggesting that these DoDs can be reliably used to detect partial collapse (Table 2). This includes DoD3 and DoD4, both generated using a rapid PPK-corrected post-event drone DSM (Rapid1; Table 2). The use of the lowest image scale value (1∕8) and lowest point density in Pix4D to generate the Rapid1 DSM in 0.50 h (Table 1) retained sub-meter LoDs (0.49–0.54 m) (Table 2).
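The interpretation of the LoD as a ±threshold on a DoD can be sketched as follows. This is an illustrative example on toy grids, not the workflow used in this study: the function name is hypothetical, the DSMs are assumed to be co-registered grids of equal shape, and the DoD is taken as post-event minus pre-event, so negative values indicate elevation loss.

```python
def threshold_dod(dsm_post, dsm_pre, lod):
    """Difference two co-registered DSM grids and mask values within ±LoD.

    Returns a grid in which differences with magnitude <= lod are replaced
    by None (treated as likely error) and larger differences are kept.
    """
    if len(dsm_post) != len(dsm_pre):
        raise ValueError("DSMs must have the same number of rows")
    dod = []
    for row_post, row_pre in zip(dsm_post, dsm_pre):
        if len(row_post) != len(row_pre):
            raise ValueError("DSM rows must have the same length")
        out_row = []
        for z_post, z_pre in zip(row_post, row_pre):
            diff = z_post - z_pre
            out_row.append(diff if abs(diff) > lod else None)
        dod.append(out_row)
    return dod


# Toy 2 x 2 elevation grids in meters (made-up values for illustration).
pre_event = [[10.0, 10.0], [25.0, 4.0]]
post_event = [[10.2, 6.5], [25.0, 4.6]]

# 0.54 m is the largest LoD reported for the RTK/PPK-based DoDs.
masked = threshold_dod(post_event, pre_event, lod=0.54)
for row in masked:
    print(row)
```

With a multi-meter LoD, such as those obtained for the non-RTK/PPK cases, the same masking would discard the 3.5 m loss in the toy grid, which is the practical meaning of the statement that such DoDs cannot reliably reveal partial collapse.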

Table 2. Hypothetical DoDs calculated using different DSM combinations. The LoD for each DoD was calculated using Eq. (2).

a RMSEz of the pre-disaster DSM. b RMSEz of the post-disaster DSM. c RMSEz of Slow DSM, as shown in Table 1. d RMSEz of the lidar DSM. e RMSEz of Rapid1 DSM, as shown in Table 1. f RMSEz of a DSM generated using a non-RTK/PPK senseFly eBee (no GCPs) from Hugenholtz et al. (2016).

The horizontal accuracy of the slow PPK-corrected drone DSM was visually assessed by calculating a DoD with the lidar DSM (Fig. 1b). The DoD shows blue tints for elevation overestimations and red tints for elevation underestimations by the drone DSM (Fig. 1b). A 2013 satellite image was viewed in Google Earth and compared to the drone orthomosaic to determine buildings common to both datasets. Figure 1b identifies 16 buildings with large regions of contiguous DSM differences. These contiguous DSM differences are due to changes that occurred between the 2013 lidar and 2018 drone data acquisitions, such as new construction, structure removal, and parking lot excavation (Fig. 1b). For the rest of the DoD, the red and blue cells mostly correspond to changes in vegetation and inconsistencies in building footprint edges between the drone and lidar DSMs (Fig. 1b). Building outlines appear mostly blue and do not appear weighted more heavily in one direction (Fig. 1b), suggesting no major horizontal offset of the drone DSM relative to the lidar DSM. With a 0.31 m average point spacing, it is possible that the lidar point cloud did not sample roof edges, resulting in slightly smaller building footprints in the lidar DSM than the drone DSM. Building footprint edge differences could also be due to inaccurate geometry from drone-based photogrammetry.

3.2 Building representation: mesh resolution and data gap assessment

The appearance of buildings varied considerably between the medium- and high-resolution 3-D meshes. Figure 2 shows eight sample buildings represented by the dense point cloud (colored by 3-D point density), both meshes, and Google 3-D as a reference. Both meshes were generated using the settings described in Sect. 2.4, with only the mesh resolution setting varying. For each building, the point density is higher on roofs than facades, and data gaps (i.e., regions of zero points) are visible within facades (Fig. 2). The medium-resolution mesh has a visibly poorer reconstruction of building geometry and, subsequently, more deformations in texture than the high-resolution mesh (Fig. 2). This was expected, as each medium-resolution building contains only 4 %–5 % of the vertices and faces of its high-resolution counterpart. Figure 2a–d show heritage buildings with complex geometry: Victoria City Hall (Fig. 2a), St. John the Divine Anglican Church (Fig. 2b), Alix Goolden Performance Hall (Fig. 2c), and St. Andrew's Cathedral (Fig. 2d). Smaller architectural features common to these heritage buildings, such as gabled entrances, dormer windows, conical roofs, spires, and towers are better resolved in the high-resolution mesh (Fig. 2a–d). For these buildings, as well as buildings with simpler geometry (Fig. 2e–h), the high-resolution mesh shows higher linearity along the edges of facades, roofs, and windows. For the high-rise buildings (Fig. 2e–h), facades with widespread data gaps in the point cloud appear to protrude inward and outward in the meshes, and they have severe textural distortions. For generally planar facades with regular sampling (e.g., the front-facing facades in Fig. 2e and f), the apparent geometrical and textural differences between the medium- and high-resolution meshes are less prominent. 
The 95 %–96 % lower density of vertices and faces in the medium-resolution mesh appears more robust regarding geometrical and textural distortions for buildings with simpler, planar geometry than those with complex geometry, provided there is adequate sampling. However, as demonstrated by the high-rise buildings (Fig. 2e–h), facades with widespread data gaps have severe distortions, regardless of mesh resolution.

Figure 2. Sample buildings segmented from the dense point cloud (colored by 3-D point density), medium-resolution mesh, and high-resolution mesh. Both meshes were generated using identical input imagery and processing settings, except for the mesh resolution setting. Google 3-D is shown as a reference for building appearance (© Google Earth; Google, 2018).

The point clouds in Fig. 2 show that roofs were more densely and regularly sampled than facades, and some facades contained widespread gaps that resulted in severe distortions in the meshes. To further assess facade data gaps, particularly partial data gaps, six facades were segmented from the dense point cloud and high-resolution mesh. The 0.50 m point density raster and high-resolution mesh segmentation are shown for each facade in Fig. 3. Data gaps, represented by red cells, encompass 9 %–59 % of the facades (Fig. 3). For each facade, large regions of contiguous red cells in the point density raster appear to correspond to distortions in the mesh (i.e., stretched texture and inwardly protruding geometry).

4.1 Key lessons: drone geospatial accuracy and up-to-date pre-disaster DSMs

For building collapse detection (e.g., Moya et al., 2018), drones can provide post-event DSMs that can be differenced with pre-event DSMs derived from lidar and other methods. However, there are geospatial accuracy requirements to minimize errors caused by the misregistration of pre- and post-event DSMs. As such, we conducted a vertical accuracy assessment of each PPK-corrected drone DSM and the lidar DSM using 47 ground-surveyed checkpoints. The RMSEz values were then used to calculate LoDs for hypothetical DoDs generated from different combinations of pre- and post-disaster DSMs. For DoDs derived from one or more non-RTK/PPK drone DSMs, the LoDs exceeded the typical height of a single building story, suggesting that these DoDs cannot be reliably used to detect partial collapse (Table 2). Conversely, DoDs generated with one or more DSMs from an RTK/PPK drone (DoD1–4) had LoDs ranging from 0.27 to 0.54 m, suggesting that these DoDs can be reliably used to detect partial collapse (Table 2). These results demonstrate that, in the absence of GCPs, RTK/PPK-enabled drones are required for reliable building collapse detection and that rapid processing settings can be used.
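One common way to derive an LoD from two RMSEz values is to combine them in quadrature and scale the result by a multiplier. The sketch below assumes that form. The multiplier `k`, the function names, and the example inputs are illustrative assumptions; the formula actually used in this study is the one defined in its methods and may differ.

```python
import math


def level_of_detection(rmse_pre, rmse_post, k):
    """Propagate two DSM vertical errors (m) into a DoD level of detection (m).

    k is a multiplier chosen by the analyst (assumption; for example, 1.96
    corresponds to a 95 % confidence level if errors are normally distributed).
    """
    return k * math.sqrt(rmse_pre ** 2 + rmse_post ** 2)


def detectable(change_m, lod_m):
    """Return True if an elevation change exceeds the level of detection."""
    return abs(change_m) > lod_m


# Illustrative inputs: two hypothetical DSMs with RMSEz of 0.08 m and 0.16 m.
lod = level_of_detection(0.08, 0.16, k=1.96)
print(round(lod, 2), detectable(-3.0, lod))
```

Because the errors add in quadrature, the less accurate DSM dominates the LoD, which is why a single DSM with poor vertical accuracy is enough to push the LoD beyond the height of a building story.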

Furthermore, the DoD calculated by differencing the lidar DSM with the slow PPK-corrected drone DSM showed buildings with large regions of contiguous DSM differences due to changes that occurred between the 2013 lidar and 2018 drone data acquisitions, such as new construction, structure removal, and parking lot excavation (Fig. 1b). This demonstrates that, for building collapse detection, it is necessary to maintain an up-to-date pre-disaster DSM to avoid false detections. Victoria, like most urban areas, has a dynamic downtown core with rezoning and new building construction or renovations to help accommodate significant population growth forecasted over the next 20 years (CoV, 2011). Routine updates to urban DSMs could help discriminate real, disaster-induced changes to buildings from those associated with construction or renovation activities. Up-to-date DSMs can be derived from many methods, including airborne photogrammetry and laser scanning. To reduce costs and time associated with continuously updating a pre-disaster DSM, future research should focus on developing methodologies to facilitate the distinction between construction- or renovation-modified and disaster-damaged buildings in a DoD.
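The differencing step can be sketched as follows, assuming the two DSMs are already co-registered grids with the same extent, cell size, and vertical datum. The function names, the small nested-list grids, and the threshold value passed in are hypothetical and only illustrate the idea.

```python
def dsm_of_difference(dsm_post, dsm_pre):
    """Cell-by-cell difference of two co-registered DSM grids (post minus pre)."""
    return [
        [post - pre for post, pre in zip(row_post, row_pre)]
        for row_post, row_pre in zip(dsm_post, dsm_pre)
    ]


def flag_changes(dod, lod):
    """Label each cell: -1 surface lowering, +1 surface raising, 0 below LoD."""
    flags = []
    for row in dod:
        flags.append([0 if abs(d) <= lod else (1 if d > 0 else -1) for d in row])
    return flags


# Illustrative 2 x 2 grids of elevations in meters.
pre = [[10.0, 10.0], [25.0, 4.0]]
post = [[10.1, 10.0], [22.0, 9.0]]
print(flag_changes(dsm_of_difference(post, pre), lod=0.54))
```

A flagged cell only indicates an elevation change larger than the LoD. It cannot, by itself, separate collapse from demolition, excavation, or new construction, which is why an outdated pre-disaster DSM leads to false detections.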

4.2 Key lessons: drone mesh resolution and imaging platform

From an image processing standpoint, it was shown in Sect. 3.2 that mesh geometry and texture were improved considerably from a medium-resolution to a high-resolution mesh (Fig. 2). The high-resolution mesh required more processing time, including subsetting the project into two, but these improvements justify the added time for virtual 3-D damage assessment applications. For image collection, the deformed geometry and texture of buildings (Figs. 2 and 3) could be improved by collecting highly oblique images of facades in addition to nadir images of roofs and ground features. The median 7° camera pitch angle used in this case study was likely insufficient for capturing vertical and near-vertical faces, resulting in large point cloud data gaps and geometrical and textural distortions in the 3-D mesh that could be mistaken for damage (Figs. 2 and 3). Using a higher camera angle (e.g., 30–45° off nadir) could make important improvements. Rupnik et al. (2015) found that increasing the camera tilt angle resulted in a higher point density on building facades and higher 3-D precision of points and that the addition of oblique images to a nadir image set increased the vertical accuracy of points. This suggests that different hardware is required for mapping municipalities in 3-D with small drones. Options include multi-rotor drones with gimbaled cameras that are capable of highly oblique image capture. However, in the near term, we suspect multi-rotor drones may be more difficult for urban overflight than fixed-wing drones from a regulatory perspective. Most small multi-rotor drones have fixed-pitch rotors, which, in the event of power loss, do not allow for the drone to enter autorotation to substantially slow the descent (Perritt Jr. and Sprague, 2017). Fixed-wing drones, on the other hand, are able to glide after losing power (Perritt Jr. and Sprague, 2017).
Therefore, a potential solution is to use a lightweight fixed-wing drone with a camera that tilts for oblique image capture. One commercially available option is the senseFly eBee X drone with a senseFly SODA 3-D RGB camera, which captures one nadir image and two laterally oblique images per image waypoint. The eBee X model became available in September 2018, after the data capture in this study. Lightweight RTK/PPK-enabled multi-rotor drones may be more affordable than the senseFly eBee X with a SODA 3-D camera, but they typically have a shorter battery life and subsequently lower areal coverage than fixed-wing drones.

It is important to note that a higher camera angle is not a panacea: higher camera tilt angles result in larger occlusions due to surrounding buildings, which contribute to lower point density on lower parts of facades (Rupnik et al., 2015). Moreover, point cloud gaps will persist on facades due to several factors, including (i) occlusions caused by surrounding buildings, facade protrusions, and other objects; (ii) insufficient texture; (iii) highly reflective surfaces like glass; and (iv) poor image quality (Fonstad et al., 2013; Alsadik et al., 2014). Another potential solution is to obtain images of building facades from the ground. Wu et al. (2018) showed that drone-derived textured meshes of urban study areas in Germany and Hong Kong were improved with the integration of ground-based images. The meshes had increased geometric accuracy and improved texture (Wu et al., 2018). However, potential challenges in obtaining terrestrial images include added time, safety concerns, and limited access, especially in a post-disaster context.

4.3 Applicability to other urban areas

The data acquisition methods used in this study will need to be adapted to fit the conditions of different urban areas. For example, flight altitude will need to be adjusted to maintain a safe vertical clearance from the tallest building. If the terrain in the area is sloped, elevation data should be input into the flight planning software to keep the flight altitude constant. A grid of flight lines is recommended, although its orientation and image overlap will vary depending on factors such as building layout and density. Cities with taller buildings and greater variation in building height than Victoria may be difficult to map in 3-D with a drone, as the high flight altitude may preclude full capture of facades. In a post-disaster context, a takeoff and landing location may be difficult to locate and access due to widespread destruction. Weather conditions such as high winds and rain following storm events may pose challenges to the flying ability of lightweight drones. Atmospheric conditions such as haze and smoke also limit optical sensors in imaging destruction.

The applicability of this study to other urban areas may be further challenged by legal restrictions in different national airspaces. For instance, a 2017 evaluation of drone regulations in 19 countries showed that 12 countries (including Canada, Italy, and China) prohibited drone flights over urban areas, and some countries also required minimum horizontal distances to be kept from these areas (Stöcker et al., 2017). Furthermore, 17 of 19 countries specified maximum flight altitudes, which ranged from 90 to 152 m a.g.l. (Stöcker et al., 2017). Additional operational limitations include minimum horizontal distances from airports and heliports (Stöcker et al., 2017). For urban overflight outside of regulatory operational limitations, special approval could be an option, although it is not guaranteed. Special approval would be required, for example, in cities where building heights exceed the maximum flight altitude or in cities with interior airports or heliports, which are common in major urban areas. As in this case study, special approval and multi-stakeholder coordination are likely requirements for drone-based mapping in other urban areas. In summary, the applicability of this study to other cities will depend on the urban topography, weather and atmospheric conditions, and drone regulations.

We presented a case study of drone-based pre-disaster mapping in downtown Victoria, British Columbia, Canada. The objectives were to assess the quality of the data in terms of geospatial accuracy and 3-D building representation. Assessed against 47 ground-surveyed checkpoints, the RMSEz of the drone-derived DSM was 0.08 m. The DSM of difference (DoD = DSMdrone − DSMlidar) showed complete roof overlap, suggesting adequate horizontal accuracy for change detection applications. For building collapse detection, results suggest that drones with RTK/PPK image georeferencing capabilities and up-to-date pre-disaster DSMs are required to avoid false detections. Furthermore, image processing using rapid settings, as opposed to slow settings, reduced processing time from 8.14 to 0.50 h, increased DSM RMSEz from 0.08 to 0.16 m, and increased DoD LoD from 0.34 to 0.54 m. Though processing times were specific to our computing hardware, these differences demonstrate that rapid processing is capable of quickly generating DSMs that can reliably detect sub-meter building collapse. Conversely, hypothetical DoDs derived from one or more non-RTK/PPK drone DSMs had LoDs too high (i.e., >6 m) to reliably detect partial building collapse. These results suggest that RTK/PPK-enabled drones and rapid image processing are most suitable for rapid building collapse detection with drones.

For building damage assessment with drone-derived 3-D textured meshes, it was shown that a high-resolution mesh visually improved building geometry and texture relative to a medium-resolution mesh, which contained 95 %–96 % fewer vertices and faces, especially for heritage buildings with complex geometries and small architectural features. However, neither mesh resolution was able to cope with large point cloud gaps on building facades. These data gaps were shown to correspond with severely distorted building geometry and texture in the mesh. Therefore, for future drone-based pre- and post-disaster 3-D mapping of municipalities, different hardware will be required. The ability to capture highly oblique images is paramount to reconstructing building facades. Options include a multi-rotor drone with a gimbaled camera. However, in the near term, lightweight multi-rotor drones may be more challenging for large-area mapping missions in major urban areas due to regulatory restrictions. Therefore, we suggest a follow-up study using a fixed-wing drone with oblique image acquisition capabilities.

The data used in this study are not publicly available due to privacy issues.

CHH conceptualized the research goals; MK and CHH designed the methodology; MK executed the methodology; MK prepared the manuscript; and CHH reviewed and edited the manuscript.

The authors declare that they have no conflict of interest.

We would like to thank the City of Victoria for helping to coordinate the drone data acquisition and providing access to the drone and lidar data, GlobalMedic for helping to design and coordinate the drone data acquisition, InDro Robotics and Global UAV Technologies for acquiring the drone data, Nav Canada for coordinating the drone flights with the harbor control tower and pilots, and Transport Canada for granting approval for the drone operations. We gratefully acknowledge the support of Alberta Innovates and Alberta Advanced Education. We also appreciate the helpful comments and suggestions from the anonymous reviewers and editor.

This paper was edited by Kai Schröter and reviewed by three anonymous referees.

Achille, C., Adami, A., Chiarini, S., Cremonesi, S., Fassi, F., Fregonese, L., and Taffurelli, L.: UAV-based photogrammetry and integrated technologies for architectural applications—methodological strategies for the after-quake survey of vertical structures in Mantua (Italy), Sensors, 15, 15520–15539, https://doi.org/10.3390/s150715520, 2015.

AIR Worldwide: Study of Impact and the Insurance and Economic Cost of a Major Earthquake in British Columbia and Ontario/Quebec, The Insurance Bureau of Canada, AIR Worldwide, Boston, MA, USA, 2013.

Alsadik, B., Gerke, M., and Vosselman, G.: Visibility analysis of point cloud in close range photogrammetry, in: ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, ISPRS Technical Commission V Symposium, 23–25 June 2014, Riva del Garda, Italy, 9–16, 2014.

ASPRS – American Society for Photogrammetry and Remote Sensing: ASPRS Positional Accuracy Standards for Digital Geospatial Data, Photogramm. Eng. Remote Sens., 81, 1–26, https://doi.org/10.14358/PERS.81.3.A1-A26, 2015.

Calantropio, A., Chiabrando, F., Sammartano, G., Spanò, A., and Losè, L. T.: UAV Strategies Validation and Remote Sensing Data for Damage Assessment in Post-Disaster Scenarios, in: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-3/W4, 2018 GeoInformation For Disaster Management (Gi4DM), 18–21 March 2018, Istanbul, Turkey, 121–128, 2018.

CloudCompare: CloudCompare (version 2.9.1) [Software], available at: http://www.cloudcompare.org/, last access: 29 October 2018.

Copernicus EMS – Copernicus Emergency Management Service: How the Copernicus Emergency Management Service supported responses to major earthquakes in Central Italy, available at: http://emergency.copernicus.eu/mapping/ems/how-copernicus-emergency-management-service-supported (last access: 29 October 2018), 2017.

CoV – City of Victoria: Downtown Core Area Plan, City of Victoria Sustainable Planning and Community Development Department, Victoria, BC, Canada, 2011.

Cusicanqui, J., Kerle, N., and Nex, F.: Usability of aerial video footage for 3-D scene reconstruction and structural damage assessment, Nat. Hazards Earth Syst. Sci., 18, 1583–1598, https://doi.org/10.5194/nhess-18-1583-2018, 2018.

Dominici, D., Alicandro, M., and Massimi, V.: UAV photogrammetry in the post-earthquake scenario: case studies in L'Aquila, Geomatics, Nat. Hazards Risk, 8, 87–103, https://doi.org/10.1080/19475705.2016.1176605, 2017.

ESRI: ArcMap (version 10.5.1) [Software], Environmental Systems Research Institute, Redlands, CA, 2018.

Fernandez Galarreta, J., Kerle, N., and Gerke, M.: UAV-based urban structural damage assessment using object-based image analysis and semantic reasoning, Nat. Hazards Earth Syst. Sci., 15, 1087–1101, https://doi.org/10.5194/nhess-15-1087-2015, 2015.

Fonstad, M. A., Dietrich, J. T., Courville, B. C., Jensen, J. L., and Carbonneau, P. E.: Topographic structure from motion: A new development in photogrammetric measurement, Earth Surf. Proc. Land., 38, 421–430, https://doi.org/10.1002/esp.3366, 2013.

Google: Google Earth (version 7.3.2) [Software], Google, Mountain View, CA, 2018.

Hugenholtz, C. H., Whitehead, K., Brown, O. W., Barchyn, T. E., Moorman, B. J., LeClair, A., Riddell, K., and Hamilton, T.: Geomorphological mapping with a small unmanned aircraft system (sUAS): Feature detection and accuracy assessment of a photogrammetrically-derived digital terrain model, Geomorphology, 194, 16–24, https://doi.org/10.1016/j.geomorph.2013.03.023, 2013.

Hugenholtz, C., Brown, O., Walker, J., Barchyn, T., Nesbit, P., Kucharczyk, M., and Myshak, S.: Spatial accuracy of UAV-derived orthoimagery and topography: Comparing photogrammetric models processed with direct geo-referencing and ground control points, Geomatica, 70, 21–30, https://doi.org/10.5623/cig2016-102, 2016.

Kakooei, M. and Baleghi, Y.: Fusion of satellite, aircraft, and UAV data for automatic disaster damage assessment, Int. J. Remote Sens., 38, 2511–2534, https://doi.org/10.1080/01431161.2017.1294780, 2017.

Masi, A., Chiauzzi, L., Santarsiero, G., Liuzzi, M., and Tramutoli, V.: Seismic damage recognition based on field survey and remote sensing: general remarks and examples from the 2016 Central Italy earthquake, Nat. Hazards, 86, 193–195, https://doi.org/10.1007/s11069-017-2776-8, 2017.

Meyer, D., Hess, M., Lo, E., Wittich, C. E., Hutchinson, T. C., and Kuester, F.: UAV-based post disaster assessment of cultural heritage sites following the 2014 South Napa Earthquake, 2015 Digit. Herit. Int. Congr. Digit. Herit. 2015, 421–424, https://doi.org/10.1109/DigitalHeritage.2015.7419539, 2015.

Moya, L., Yamazaki, F., Liu, W., Chiba, T., and Mas, E.: Detection of Collapsed Buildings Due To the 2016 Kumamoto Earthquake From Lidar Data, Nat. Hazards Earth Syst. Sci., 18, 65–78, https://doi.org/10.5194/nhess-18-65-2018, 2018.

Perritt Jr., H. H. and Sprague, E. O.: Domesticating Drones: The technology, law, and economics of unmanned aircraft, Routledge, New York, USA, 2017.

Pix4D: Pix4Dmapper (version 4.3.27) [Software], Pix4D, Lausanne, Switzerland, 2018.

Pu, R.: A Special Issue of Geosciences: Mapping and Assessing Natural Disasters Using Geospatial Technologies, Geosciences, 7, 1–2, https://doi.org/10.3390/geosciences7010004, 2017.

Rupnik, E., Nex, F., Toschi, I., and Remondino, F.: Aerial multi-camera systems: Accuracy and block triangulation issues, ISPRS J. Photogramm. Remote Sens., 101, 233–246, 2015.

senseFly: eMotion (version 3) [Software], senseFly, Cheseaux-sur-Lausanne, Switzerland, 2018.

Stöcker, C., Bennett, R., Nex, F., Gerke, M., and Zevenbergen, J.: Review of the current state of UAV regulations, Remote Sens., 9, 33–35, https://doi.org/10.3390/rs9050459, 2017.

United Nations: World Urbanization Prospects: The 2018 Revision [key facts], Population Division, United Nations, New York, USA, 2018.

VC Structural Dynamics Ltd: Executive Summary, Citywide Seismic Vulnerability Assessment of The City of Victoria, The Corporation of the City of Victoria, Victoria, BC, Canada, 2016.

Vetrivel, A., Gerke, M., Kerle, N., and Vosselman, G.: Identification of damage in buildings based on gaps in 3D point clouds from very high resolution oblique airborne images, ISPRS J. Photogramm. Remote Sens., 105, 61–78, https://doi.org/10.1016/j.isprsjprs.2015.03.016, 2015.

Vetrivel, A., Gerke, M., Kerle, N., Nex, F., and Vosselman, G.: Disaster damage detection through synergistic use of deep learning and 3D point cloud features derived from very high resolution oblique aerial images, and multiple-kernel-learning, ISPRS J. Photogramm. Remote Sens., 140, 45–59, https://doi.org/10.1016/j.isprsjprs.2017.03.001, 2018.

Westoby, M. J., Brasington, J., Glasser, N. F., Hambrey, M. J., and Reynolds, J. M.: “Structure-from-Motion” photogrammetry: A low-cost, effective tool for geoscience applications, Geomorphology, 179, 300–314, https://doi.org/10.1016/j.geomorph.2012.08.021, 2012.

Wu, B., Xie, L., Hu, H., Zhu, Q., and Yau, E.: Integration of aerial oblique imagery and terrestrial imagery for optimized 3D modeling in urban areas, ISPRS J. Photogramm. Remote Sens., 139, 119–132, https://doi.org/10.1016/j.isprsjprs.2018.03.004, 2018.